About Simurg

Simurg environment represents a unique easily-deployable and operatable software platform that seamlessly merges novel model-based techniques and algorithms with existing well-established software and workflows in a convenient GUI in order to perform the broadest spectrum of model-based analyses relevant for quantitative pharmacology.

Objectives

- Data handling and processing.

- Exploratory data analysis and quality check.

- Solving the direct problem for mathematical models based on various types of differential equations.

- Parameter estimation procedures for non-linear and linear systems with or without random effects.

- Development of regression models for various types of data (binary, categorical, etc.).

- Meta-analysis and meta-regression.

- Model development in Bayesian paradigm.

- Generation of reports based on the results of the analyses.

Simurg environment modules

- Data management module - semi-automatic data processing, visualization and quality check.

- NLME module - mathematical modeling of dynamical data using hierarchical modeling with frequentist and Bayesian approach suitable for both empirical and mechanistic models.

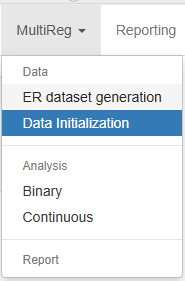

- MultiReg module - expands the range of data types and associated mathematical methods a modeler can use within Simurg environment.

- Reporting module - compile and update modeling reports in various formats.

Access to Simurg - internal servers

Requesting access

- Create a request using this form

- Wait for an e-mail - contains a link to the server and credentials

Accessing the environment

- Follow the link to the relevant server (see the list below)

- Fill in the user name

- Fill in the password

- Press "Sign in"

- Select "Simurg"

- Select number of cores and RAM

List of servers:

Technical support

Use this form to provide feedback or report technical issues.

About Data management module

Background

Model-based analyses aim to establish quantitative relationships between different entities. These relationships are inherently data-driven, meaning they can only be as accurate and reliable as the underlying data allows. Consequently, a thorough evaluation of the data is essential before initiating any modeling efforts. Furthermore, data used in these analyses can come in various shapes and forms, following CDISC, software-specific or company-specific standards. Thus, a modeler should be equipped with a tool to perform convenient transitions from one type of data standard to another, visualize different types of data, and scan the data for potential errors and outliers.

Objectives

- CDISC-compliant semi-automatic data processing.

- Visualization of all types of data in different shapes and forms.

- Quality check of the data.

Sections of the module

Data

The Data section is the starting point for preparing your dataset for model-based analysis. It allows you to upload, explore, and structure your data by selecting key variables such as ID, time, and dependent values. You can also create or remove columns, filter the dataset, and classify covariates as continuous, categorical, or time-varying. These preparatory steps ensure your dataset is properly organized for further analysis and quality checks within the Data Management module.

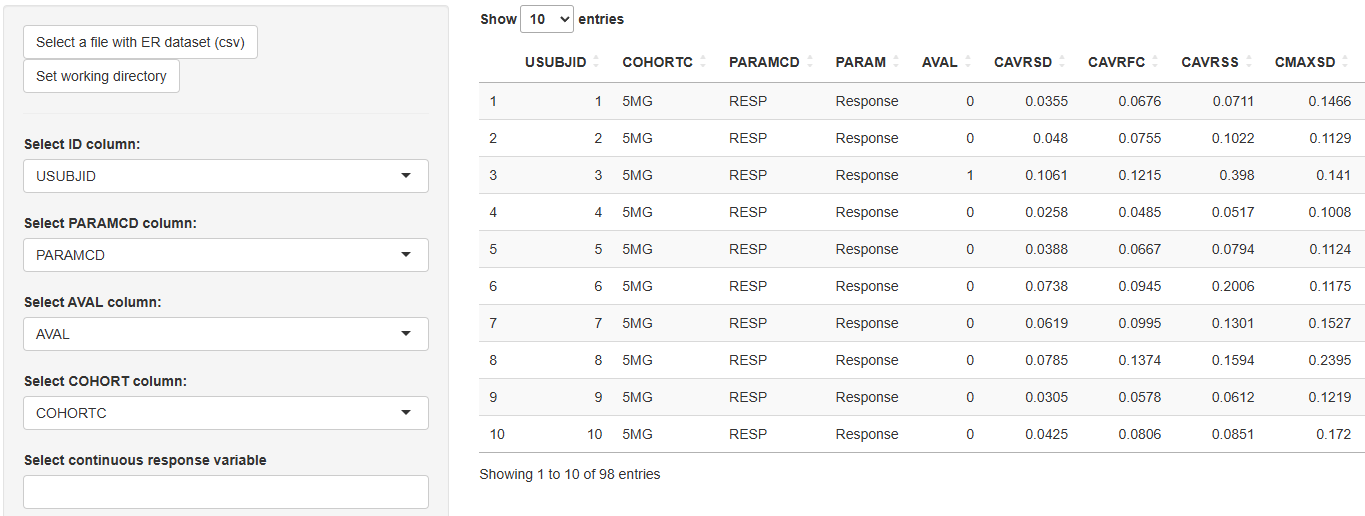

To begin working with this module, load a dataset into the environment by clicking the  button. The environment supports datasets in

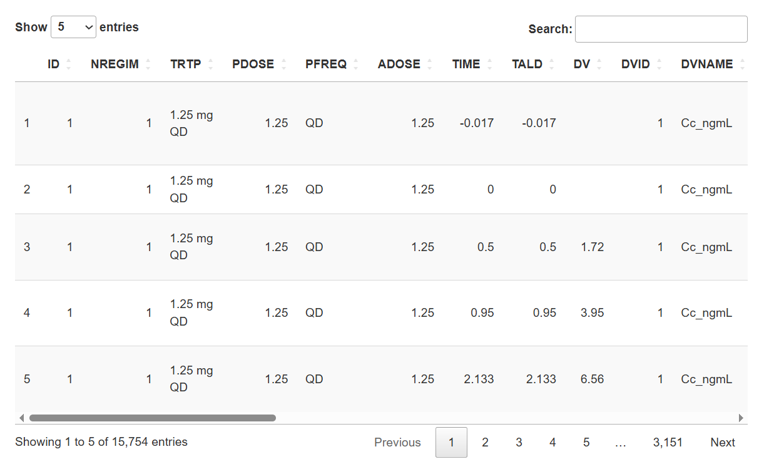

button. The environment supports datasets in .csv format. Once uploaded, the dataset will be displayed in the main panel on the right side of the screen:

Before proceeding, it is recommended to define the directory where you wish to save all outputs generated during this session. This can be done by clicking the button. If this step is skipped, you will be prompted to select a directory the first time you attempt to save any results. Setting the directory at this stage ensures a smoother workflow throughout the module.

Column Specification

Once the dataset is visible in the main panel, the next step is to identify key structural columns. Use the following dropdown menus to specify:

-

ID Column - dentifies the subject or observational unit.

-

TIME Column - indicates the independent time variable.

-

DV Column - corresponds to the dependent variable (e.g., concentration, biomarker, etc.).

Each dropdown will list the available columns in your dataset, allowing for direct selection.

Data Modification

In this section, you may also perform various data manipulation tasks, including:

-

Adding a new column: Use the "Add Column" window to create a new variable using R syntax. For example:

- To create a character column from a numeric variable:

SEXC = as.character(SEX) - To calculate a summary value like the mean of a column:

BMI_mean = mean(BMI)

If the formula is incorrectly specified, a warning window will inform you of the syntax issue.

- To create a character column from a numeric variable:

-

Removing columns: Use the "Remove one or more columns" window to select and delete any variables that are no longer needed.

-

Filtering the dataset: A filtering option is also available to work with a subset of the original dataset if required.

Covariate Classification

To facilitate downstream tasks, especially quality checks and exploratory analyses, you may specify different types of covariates using the following windows:

-

Select all columns with continuous covariates

-

Select all columns with categorical covariates

-

Select all columns with time-varying covariates, here you specify which columns, previously selected as continuous or categorical covariate, are time-varying.

While specifying these is optional for general dataset work, they are required to activate functionalities in the Quality Check section of the Data Management module.

Dataset Initialization and Saving

Once all necessary specifications and transformations are complete:

-

Click the

button to apply the selected column designations and any modifications. This step activates access to the subsequent sections of the module.

-

If you've made changes and want to preserve this updated version of the dataset, click the

button. The file will be saved in the defined directory as a

.csvfile.

Once you have successfully initialized the dataset, you are ready to proceed to any of the other available sections within the Data Management module: Continuous Data, Covariates, Dosing Events, and Tables. These sections offer specific tools to further exploratory data analysis.

Please note that the Quality Check section will only be accessible if you have specified the covariates (continuous, categorical, or time-varying) in the current section. If no covariates have been defined, this section will remain disabled.

Data Quality Check

Before beginning any modeling or analysis, it is essential to ensure the integrity and consistency of the dataset. The Quality Check section of the Data Management module provides automated tools to detect common issues such as missing values, inconsistent dosing records, and irregular time patterns. Addressing these potential problems early in the workflow is critical for ensuring reliable model performance and avoiding biased or misleading results.

Once your dataset has been properly initialized in the Data section and covariate columns have been appropriately defined, the Quality Check section becomes active and available for use.

The data checks are organized into three dedicated tabs, each focusing on a specific type of data:

1. Covariates

Once the dataset has been initialized and continuous, categorical, and (if present) time-varying covariate columns have been declared in the Data section, the Covariates tab becomes active. It summarises seven automated checks, each accompanied by contextual messages and—when appropriate—tables that highlight the issues detected.

-

Declared continuous covariates

- Lists the continuous covariates provided by the user.

- If none were specified, the message “The user has not specified continuous covariates.” is shown.

-

Declared categorical covariates

- Analogous to point 1 but for categorical covariates.

- If none were specified, a corresponding message is displayed.

-

Missing-value scan

Searches the declared covariate columns for empty cells.

- If empties are found, the message "Empty cells were found in these columns and IDs:" appears, followed by a table listing the affected columns and IDs.

- Otherwise, "User-specified covariates contain no empty cells."

-

Time-varying columns change check

Verifies that each user-defined time-varying covariate truly varies within an ID.

-

Outcomes:

- "All user-defined time-varying columns change over time."

- "User-defined time-varying columns that don’t change over time:" (followed by offending column names).

- If no time-varying covariates were declared: "The user has not specified time-varying covariates."

-

-

Time-invariant stability check

Display a list of considered time invariant covariates and ensures that covariates expected to be constant within an ID do not drift over time.

- Outcomes:

- "All time invariant columns don't change over time."

- "Time invariant columns that change over time: " (followed by offending column names).

- Outcomes:

-

Invariant-covariate diagnostics Three complementary tests are run:

6.1 Balance of categorical covariates

Flags any categorical covariate where a level represents < 15 % of IDs.

- Shows "Uneven distribution of covariate levels in columns:" plus a table of covariate/level pairs.

- If no imbalance: "There is no significant imbalance detected in the distribution of levels among the categorical covariates."

6.2 Outlier detection in continuous covariates

Usual outliers: values outside \([Q1 - 1.5 × IQR\) ; \(Q3 + 1.5 × IQR]\) 1. Distant outliers: values outside \([Q1 - 2.5 × IQR\) ; \(Q3 + 2.5 × IQR]\) and beyond the 5th/95th percentiles.

- If found, the message "Possible outliers in continuous data:" appears with a three-column table: covariate, IDs with usual outliers, and IDs with distant outliers.

- Otherwise: "No possible outliers were detected."

6.3 Potential imputations in baseline covariates

For continuous covariates not marked as time-varying, the routine looks for values repeated in > 10 % of IDs—a sign of bulk imputation.

- If detected, the message "Potential imputation for baseline covariate > 10 %:" appears with a table of covariates and affected IDs.

- If none: "No imputations greater than 10 % were detected."

-

LOCF detection in time-varying covariates

Aims to identify possible Last Observation Carried Forward (LOCF) practices, where a value is repeated across successive time points.

- If any time-varying covariate shows > 3 consecutive identical values within an ID, those IDs and covariates are reported.

- Otherwise: "No LOCF were detected."

2. Dosing events

The Dosing events tab evaluates the internal consistency of all dose-related columns. For full functionality, the dataset should contain the following fields:

AMT– dose amountEVID– event identifier (0 = observation, > 0 = dose or other event)MDV– missing-DV flag (0 = DV present, 1 = DV missing)CMT– compartment number receiving the dose orADM– administration type (Simurg accepts either)DUR– infusion duration

If one or more of these columns are absent, any check that relies on that column is skipped and the message "The column required for this check is missing." is shown.

-

Presence of required columns

Confirms that all five dose-related columns are in the dataset.

- If any are missing: "The following required columns for Dosing-event checks are missing in the dataset:" followed by the list.

- If none are missing: "All expected Dosing-event columns "AMT", "EVID", "MDV", "CMT", "ADM", "DUR" are present in the dataset."

-

AMT vs EVID consistency

Detects rows that combine an observation flag with a non-zero dose amount (

EVID = 0 & AMT ≠ 0), i.e., dose information placed in observation rows.- Inconsistencies: "Inconsistencies have been found between the AMT and EVID columns. Rule: EVID = 0 & AMT ≠ 0" plus a table of

ID,TIME,AMT,EVID. - None: "No inconsistencies have been found between the AMT and EVID columns."

- Inconsistencies: "Inconsistencies have been found between the AMT and EVID columns. Rule: EVID = 0 & AMT ≠ 0" plus a table of

-

Zero-dose events

Flags dosing rows that declare a dose amount of zero (

AMT = 0 & EVID ≠ 0).- Issues found: "Dose amount 0 in dose event. Rule: AMT = 0 & EVID ≠ 0" plus a table of

ID,TIME,AMT,EVID. - None: "No zero-dose amount for dose event."

- Issues found: "Dose amount 0 in dose event. Rule: AMT = 0 & EVID ≠ 0" plus a table of

-

EVID vs MDV coherence

Looks for dose events that also claim a non-missing dependent value in the same row (

EVID ≠ 0 & MDV = 0).- If present: "Possible inconsistencies have been found between the EVID and MDV columns. Rule: EVID ≠ 0 & MDV = 0" plus a table of

ID,TIME,MDV,EVID. - None: "No inconsistencies have been found between the EVID and MDV columns."

- If present: "Possible inconsistencies have been found between the EVID and MDV columns. Rule: EVID ≠ 0 & MDV = 0" plus a table of

-

AMT supplied without CMT/ADM

Checks for non-zero doses that lack a target compartment or administration type (

AMT ≠ 0 & (CMT = 0 | ADM = 0)).- Inconsistencies: "Dose amount without compartment (CMT) or administration type (ADM)" plus a table of

ID,TIME,AMT,CMT/ADM. - None: "No inconsistencies have been found between columns AMT and CMT or AMT and ADM."

- Inconsistencies: "Dose amount without compartment (CMT) or administration type (ADM)" plus a table of

-

Infusion-duration logic

Verifies that dose rows belonging to a given CMT/ADM are either all bolus (

DUR = 0) or all infusions (DUR > 0). Mixed usage triggers the warningAMT ≠ 0 & (all DUR = 0 | all DUR ≠ 0)- If violated: "Infusion duration time zero were detected" plus table of

ID,TIME,AMT,CMT/ADM,DUR. - None: "No issues found with infusion duration time."

- If violated: "Infusion duration time zero were detected" plus table of

-

Duplicate dose records

Identifies duplicate dosing rows—same

ID, sameTIME, sameCMT/ADM, and non-zeroAMT*.- Duplicates: "Duplicates: same time, same CMT or ADM, and AMT ≠ 0 were detected." plus table of

ID,TIME,AMT,CMT/ADM - None: "No duplicate time for same dose amount."

- Duplicates: "Duplicates: same time, same CMT or ADM, and AMT ≠ 0 were detected." plus table of

The information returned by these checks helps correct dosing-record errors before advancing to modelling or simulation steps.

3. Time series

The Time Series tab contains 8 specific checks that assess the quality and consistency of longitudinal observations across time. These checks help ensure that observational data are well-structured, logically consistent, and reliable for analysis.

To enable all the checks in this tab, the dataset must include the following columns:

ID– subject identifierTIME– time of observation or eventDV– dependent variable (measurement)DVID/YTYPE– type of measurement (Simurg accepts either)MDV– missing dependent variable flag (1 = missing, 0 = present)EVID– event identifier (0 = observation, >0 = event such as dosing)

If any of these columns are missing, the checks relying on them will be skipped, and the message “The column required for this check is missing” will appear in place of the corresponding results.

-

Presence of required columns

Confirms that all six time series columns are in the dataset.

- If any are missing: "The following required columns for time series checks are missing in the dataset:" followed by the list.

- If all are present: "All required columns "ID", "TIME", "DV", "DVID/YTYPE", "MDV", and "EVID" for time series checks are present in the dataset."

-

Missing data percentage (MDV == 1) per DVID

This check calculates the proportion of measurements marked as missing (

MDV = 1) for eachDVIDlevel.- Output: Table with columns

DVIDandMDV %

- Output: Table with columns

-

Empty or zero DV when MDV ≠ 1 and DVID ≠ 0 This flags rows where the dependent variable is missing or zero despite being marked as valid observations. Rule:

DVis empty or zero whileMDV ≠ 1andDVID ≠ 0.- If found: "IDs with empty or zero DV when MDV ≠ 1 and DVID ≠ 0:" plus table of

ID - Otherwise: "No IDs found with empty DV value for observation event."

- If found: "IDs with empty or zero DV when MDV ≠ 1 and DVID ≠ 0:" plus table of

-

Non-empty DV with EVID ≠ 0

This check detects non-observation events that improperly contain DV values. Rule:

EVID ≠ 0 & MDV = 0with a non-empty or non-zeroDV.- If found: "IDs with non-empty and non-zero DV while EVID ≠ 0 and MDV = 0:" plus

IDlist - Otherwise: "No IDs found with DV values for non observation event."

- If found: "IDs with non-empty and non-zero DV while EVID ≠ 0 and MDV = 0:" plus

-

General measurement statistics

Presents summary statistics for each

DVID, showing the number of measurements and percentage of missing values.- Output: "General statistics of the measurements:" plus table with columns

DVID,Total measurements,Measurements with MDV = 1,Missing values %

- Output: "General statistics of the measurements:" plus table with columns

-

Check for positive/negative DV values

Ensures that all measurement values make sense and are consistent in sign (e.g., no negative concentrations if not expected). Exclude rows with

MDV = 1.- Output: "Check for positive/negative observation event:" plus table with columns

DVID,Positive measurements,Negative measurements

- Output: "Check for positive/negative observation event:" plus table with columns

-

Duplicate observations

Identifies duplicated time points per ID for the same

DVID(excluding rows withMDV = 1). Rule: Duplicate (ID,TIME,DVID) combinations withAMT = 0.- If found: "IDs with duplicate measurements:" and table with

ID,TIME,DVID,total measurements - Otherwise: "No IDs found with duplicate measurements."

- If found: "IDs with duplicate measurements:" and table with

-

Repeated DV values across consecutive time points

Flags sequences where the same

DVvalue is repeated in 2 or more consecutive rows, which may suggest imputation or logging errors. ExcludeMDV = 1; check is done perDVID.- If found: "Same value repeated in DV (for 2 or more sequential rows):" plus affected IDs

- If none: "No repeated values in DV (for 2 or more sequential rows)"

These checks serve as a vital step in confirming the consistency and integrity of observational data before modeling, simulation, or visual exploration.

Q1 = first quartile, Q3 = third quartile, IQR = inter-quartile range (Q3 – Q1).

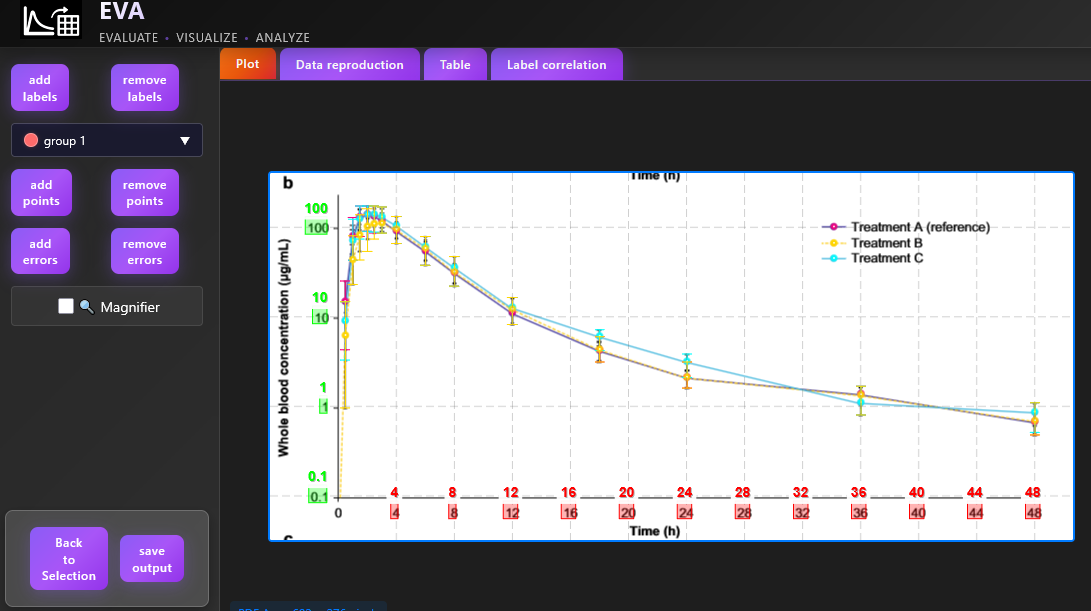

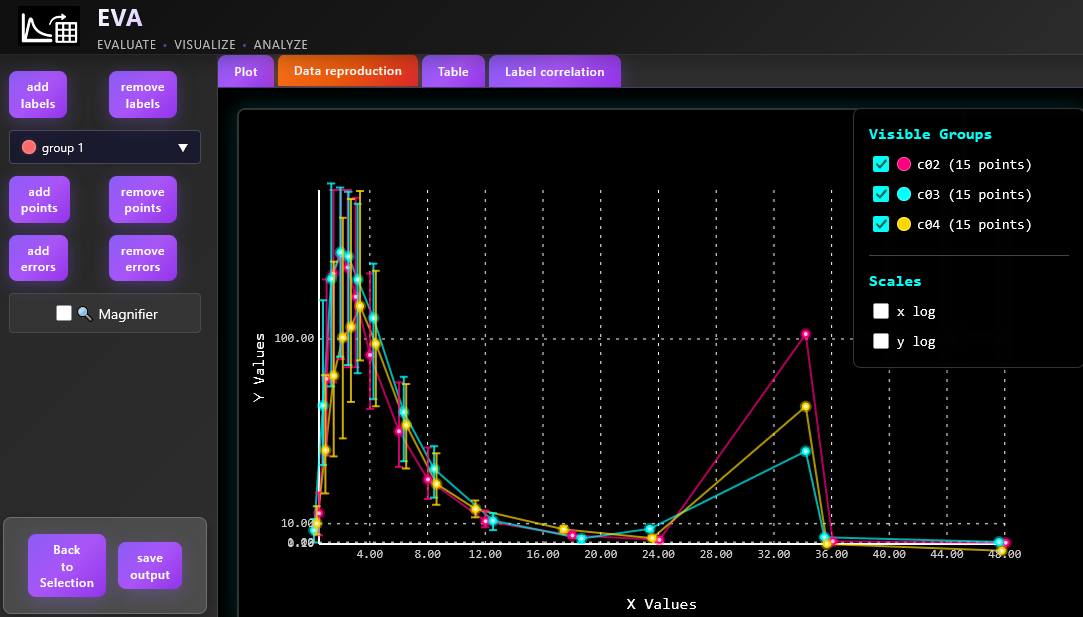

Continuous data

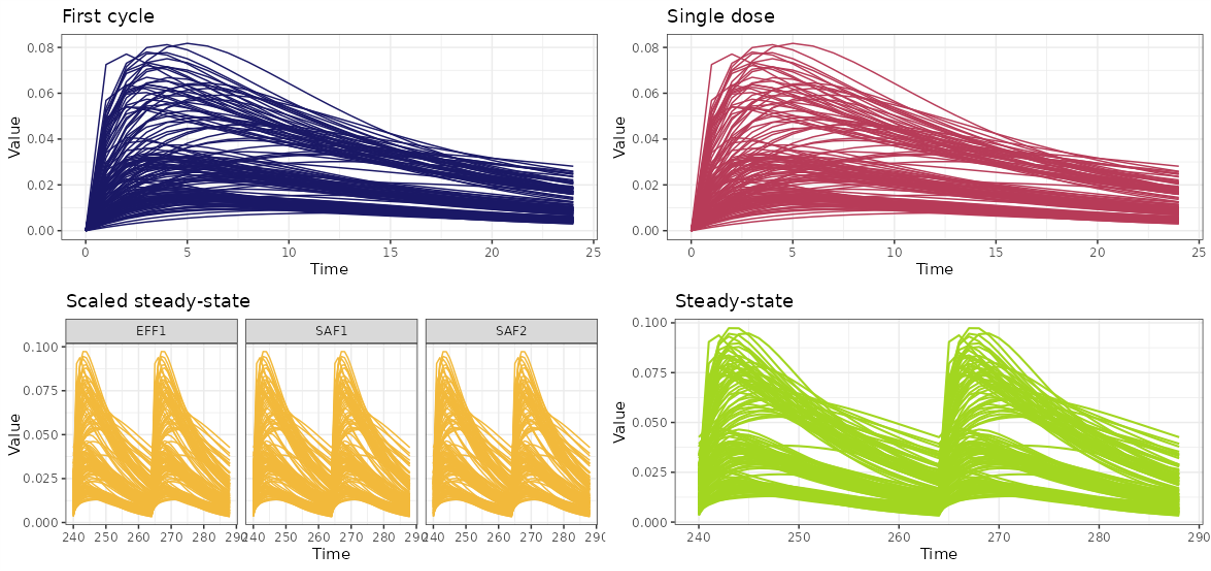

The Continuous data section provides a flexible environment for exploring how the dependent variable (DV) evolves over time. Before any modeling or covariate analysis begins, this section allows users to visually examine patterns, assess variability across subjects or groups. The plots are fully customizable, enabling tailored visualization depending on the user’s objectives.

The section is organized into three distinct tabs depending on the desired visualization:

Certainly! Here's the revised Individual Plots section with the added instructions for generating and saving the plots, integrated seamlessly into the existing style:

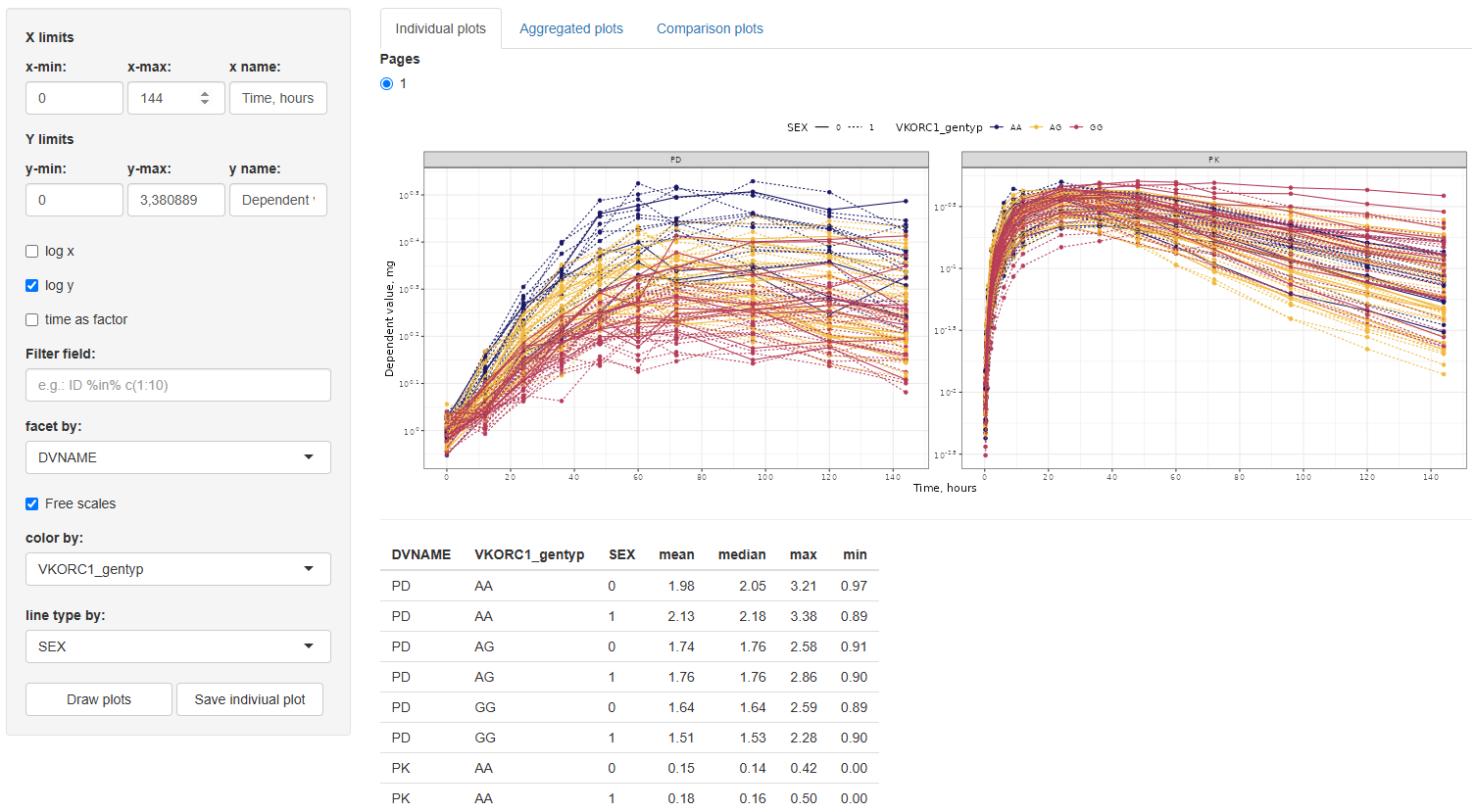

1. Individual Plots

This tab is designed to generate spaghetti plots — individual DV(TIME) trajectories — for exploratory inspection at the subject level. The plot customization options are intended to give the user full control over the graphical output, both aesthetically and analytically.

Available configuration options include:

-

X-axis settings

x-min:Minimum value for the x-axis (TIME)x-max:Maximum value for the x-axisx name:Custom label for the x-axis

-

Y-axis settings

y-min:Minimum value for the y-axis (DV)y-max:Maximum value for the y-axisy name:Custom label for the y-axis

-

Axis transformations

log x:Apply logarithmic transformation to the x-axislog y:Apply logarithmic transformation to the y-axis

-

Additional plot controls

time as factor:Treat time values as categorical (discrete time)Filter:Apply conditional filters to display a data subsetfacet by:Create subplots based on any column in the datasetFree scales:Enable individual y-axis scaling for each facetcolor by:Assign line colors using any column (e.g., treatment group, gender)line type by:Assign line styles based on a selected column (max 5 levels recommended for clarity)

Once all desired configuration options have been selected, click the button to generate the visualizations.

Along with the generated plot, a summary table of descriptive statistics — including mean, median, minimum, and maximum DV values — will automatically be displayed. These statistics are calculated for each group defined by the selected facet, color, and line type options (if any are selected), providing useful context for interpreting trends and patterns in the plotted data.

To save a specific individual plot, use the button. The plot will be saved in the working directory defined earlier in the Data section of the Data Management module.

This section is particularly useful for identifying trends and subject-level behavior before proceeding to more structured or model-based analyses.

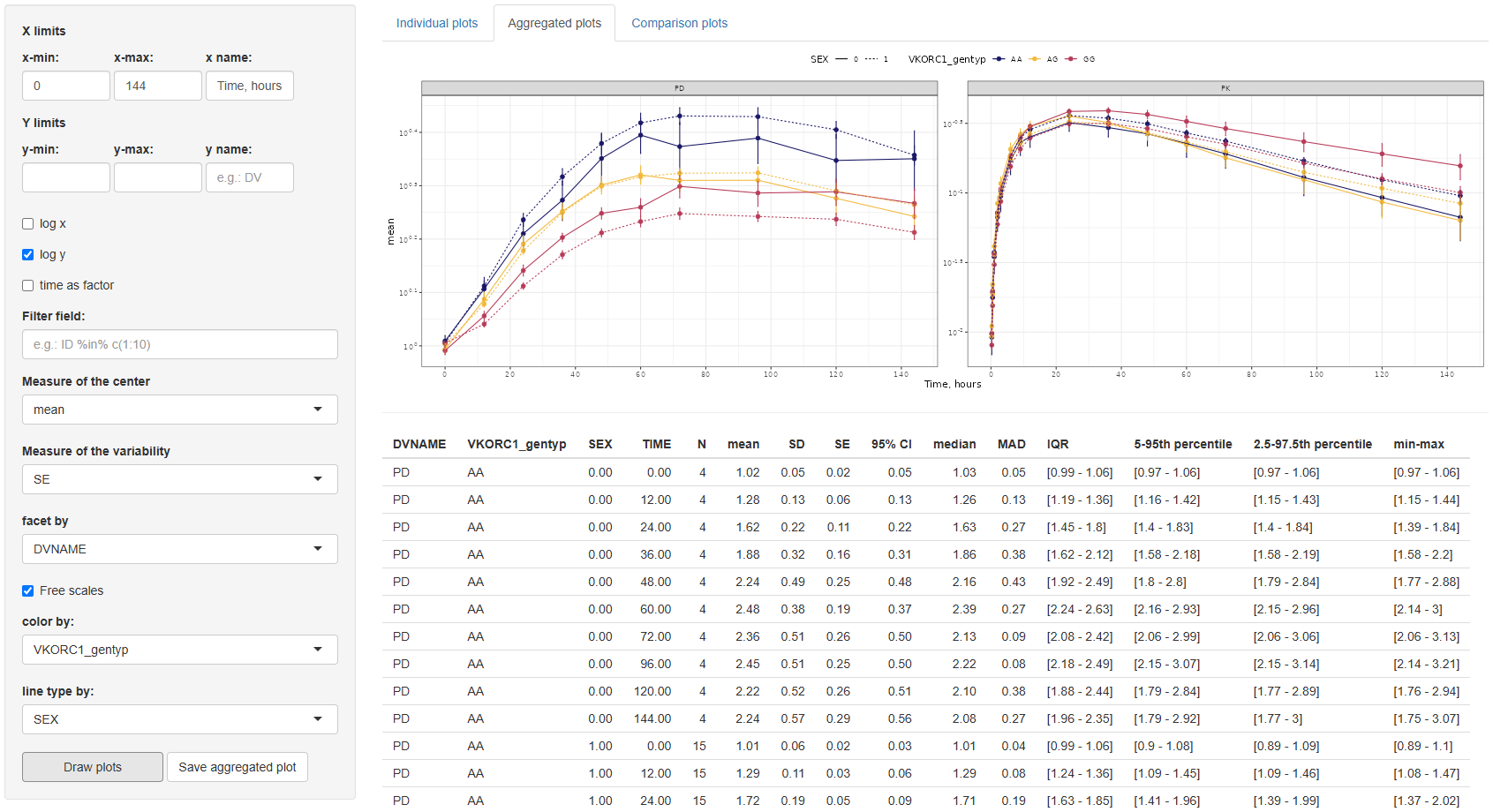

2. Aggregated plots

This tab provides tools for visualizing aggregated trends in the dependent variable (DV) over time, summarizing the data using statistical measures of central tendency and variability.

The configuration interface mirrors that of the Individual plots tab, allowing users to adjust x/y-axis limits and labels, choose log scaling, filter data, facet and color lines by any dataset column, and define line types (limited to variables with ≤5 levels).

Additionally, two new settings are provided:

Measure of the Center:Choose between mean and median.Measure of the Variability:Options depend on the selected center:- For mean: Standard Error (SE), Standard Deviation (SD), and 95% Confidence Interval (CI).

- For median: Median Absolute Deviation (MAD), Interquartile Range (IQR), 5th–95th percentile, and 2.5th–97.5th percentile.

By default, the graph displays the mean with SE as the variability measure.

After selecting the desired configuration, click the button to generate the plot. A

button is also available to export the figure to the working directory specified in the Data section.

In addition to the plot, a summary table of descriptive statistics is automatically generated. This table includes: TIME, N (number of observations), mean, SD, SE, 95% CI, median, MAD, IQR, 5th–95th percentile, 2.5th–97.5th percentile, min–max values. These statistics are reported by each combination of the selected facet, color, and linetype columns.

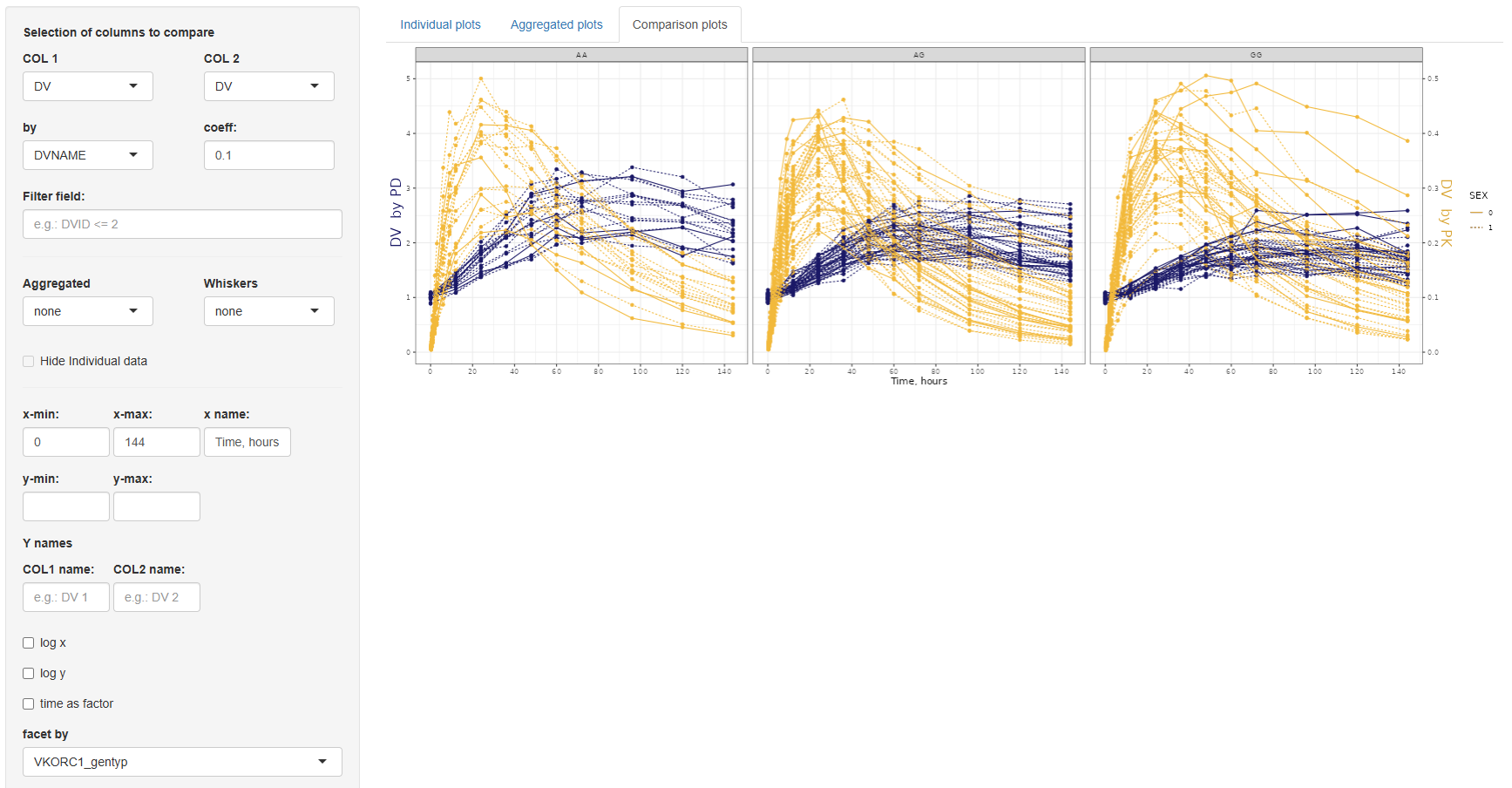

3. Comparison plots

The Comparison Plots tab offers functionality for directly comparing two sets of data in a single time-series plot. This is particularly useful when visualizing changes between groups, treatment arms, or transformations of the same variable. Both series are plotted as a function of time, allowing for clear temporal comparisons.

The configuration panel for this section is structured into three parts:

3.1. Data selection panel

This section defines the variables to be compared and includes the following settings:

COL 1andCOL 2:Selection of columns to compare.by: If the same column is selected in both COL 1 and COL 2, the by field becomes mandatory. This column must have exactly two levels and will be used to split the data for comparison.coefficient:A numeric multiplier applied to the data in COL 2 to enable scaling or adjustment for visualization.Filter field:An optional input to filter the dataset before plotting.

3.2. Aggregation settings

Here, you can specify whether to overlay aggregated trend lines and variability ranges:

Aggregated:Options are none, mean, or median.Whiskers(depending on the selected center):- If mean: none, SE (Standard Error), SD (Standard Deviation), or 95% Confidence Interval.

- If median: none, MAD (Median Absolute Deviation), IQR (Interquartile Range), 5th–95th percentile, or 2.5th–97.5th percentile.

- If none: No options available.

Hide individual data:If selected, only the aggregated lines and variability whiskers are shown, suppressing the underlying individual measurements.

3.3 Axis and layout customization

- COL 1 and COL 2: Independent x-min, x-max, x-name; y-min, y-max settings, and separate y-name for each column.

- Other options: Log-scale for x or y axes, "Time as factor", "Facet by" any dataset column, and "Line type by" for distinguishing groups (limited to variables with ≤5 categories).

Once the configuration is complete, click to visualize the data. The plot can be saved to the selected working directory using the

button.

The Continuous Data module provides a flexible framework for exploring and visualizing dependent variable (DV) values over time. Through its three tabs—Individual Plots, Aggregated plots, and Comparison plots—users can tailor plots to their specific analysis needs, from individual subject-level trajectories to population-level trends and comparative evaluations. With intuitive configuration tools, customizable aesthetics, and accompanying summary statistics, this module streamlines the process of data inspection and graphical analysis in pharmacometric and clinical datasets.

Covariates

The Covariates section of this module provides a comprehensive and flexible interface for exploring the general characteristics of covariates present in the dataset. Designed with ease of use in mind, this section enables automated visualization and statistical summary generation for both continuous and categorical variables, as well as covariate correlations. Through intuitive configuration tools, users can tailor plots and summaries to suit specific analytical needs and preferences.

The interface is divided into three main tabs based on the type of covariate visualization:

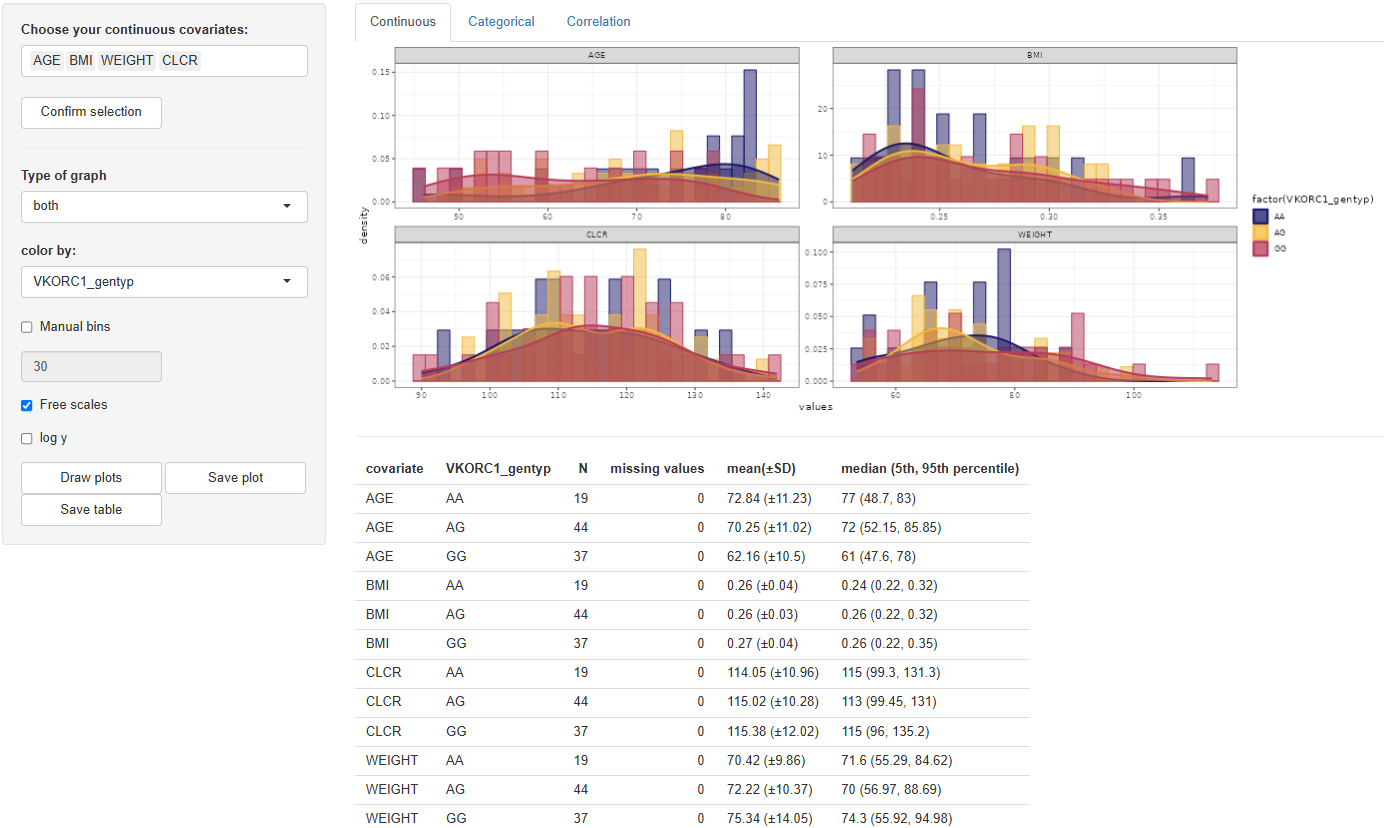

1. Continuous

The Continuous tab enables visualization of the distribution and summary statistics of continuous covariates. Users begin by selecting the desired covariates in the "Choose your continuous covariates:" selection window. After making a selection, clicking activates the configuration panel.

The available plot configuration tools include:

Type of graph:Options are histogram, density, or both (default).Color by:Any column from the dataset or none (“-” by default).Manual bins:Specify the number of histogram bins (default is 30).Free scales:Allows individual scaling per facet.Log y:Enables logarithmic scaling of the y-axis.

Once the configuration is finalized, clicking will generate the corresponding visualization in the main panel.

Accompanying the plot, a table of descriptive statistics is automatically generated. This includes: N, Missing values, Mean (± SD), Median (5th, 95th percentile). If a "color by" variable is selected, these statistics will be grouped accordingly by its levels.

To export the results, use:

to save the graph in the directory defined in the Data section.

to export the statistics table to the same directory.

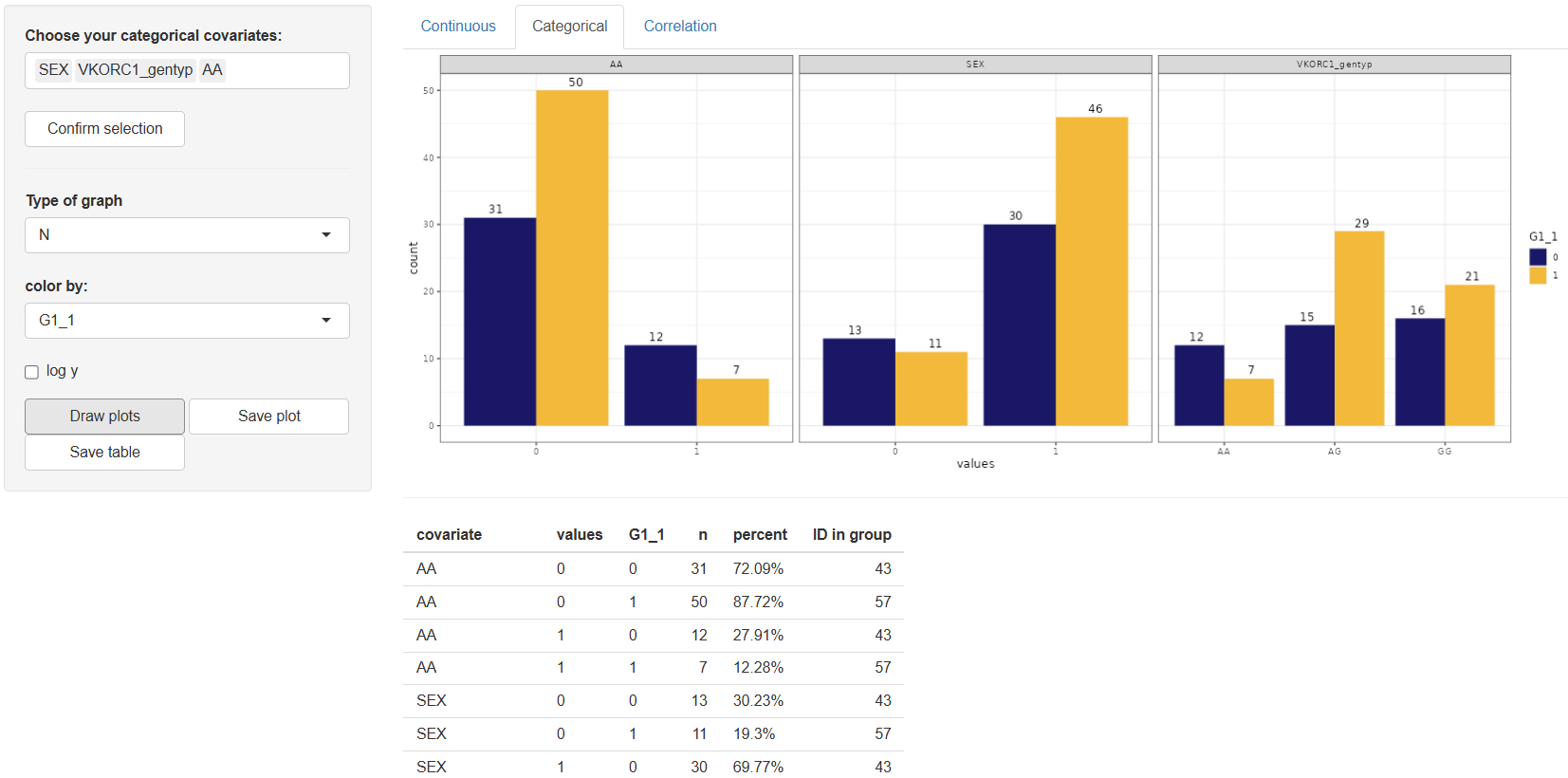

2. Categorical

The Categorical tab is dedicated to the visualization of categorical covariates and offers a user-friendly interface for generating summary plots and descriptive statistics. Work in this tab begins with selecting the desired categorical covariates from the “Choose your categorical covariates:” window. Once the variables are selected, click to proceed.

Upon confirmation, the configuration panel becomes available with the following customizable options:

Type of graph:Options include "N" (count) or "%" (percentage). The default setting is "N".Color by:Allows grouping by any column in the dataset. The default value is "–" (no grouping).Log y:Enables a logarithmic scale on the y-axis for better visualization of skewed distributions.

After adjusting the configuration to your needs, click the button. The resulting plot will be displayed in the main panel, accompanied by a descriptive table. The table includes the following columns for each category:

n– Number of observationspercent– Percentage of observationsID in group– Identifiers belonging to each category (if applicable)

If the color by option is used, statistics will be grouped accordingly.

To save your results, use the button to export the graph and the

button to export the statistics table. Both files will be saved to the working directory specified in the Data section.

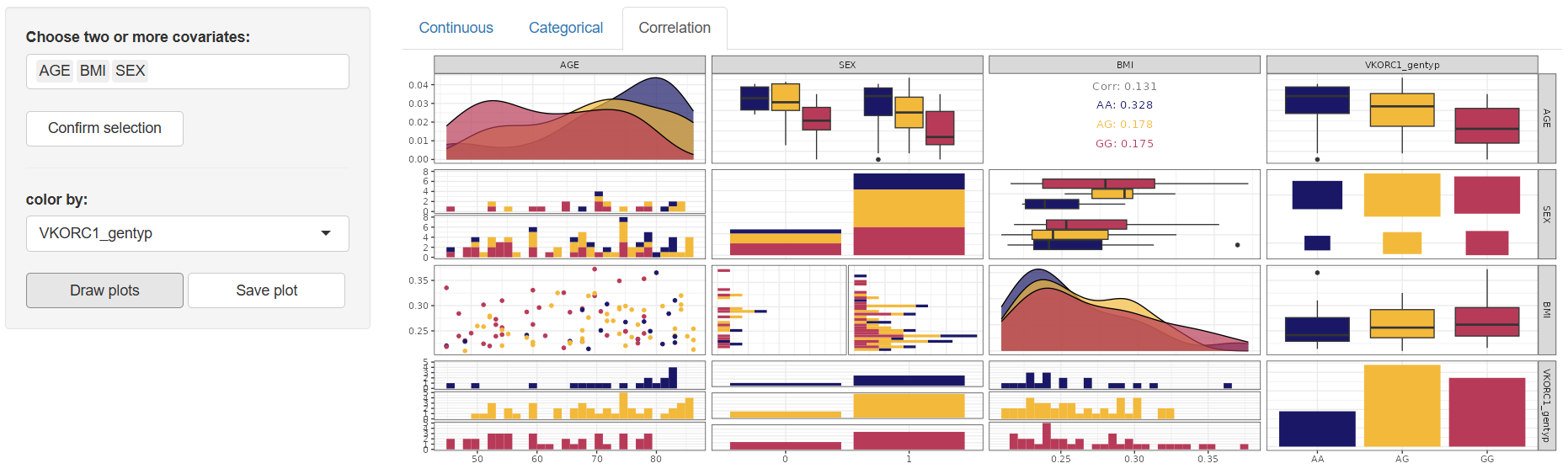

3. Correlation

The Correlation tab is designed to explore the relationships between continuous or categorical covariates. Work in this tab begins by selecting two or more covariates in the “Choose two or more covariates:” window. Once your selection is made, click to activate the configuration panel.

The available configuration tool is:

Color by:Enables grouping the correlation plot by any column in the dataset. The default value is “–” (no grouping applied).

Once the configuration is defined, click to generate the correlation matrix plot in the main panel. This plot visually presents the pairwise correlations between the selected covariates.

To save the output, use the button. The figure will be stored in the working directory defined in the Data section.

The Covariates section offers an intuitive and flexible environment for the graphical exploration and summary of both continuous and categorical variables in the dataset. Complemented by accompanying summary tables and export functions, this section ensures a comprehensive understanding of covariate behavior and structure—an essential step in data preparation and exploration.

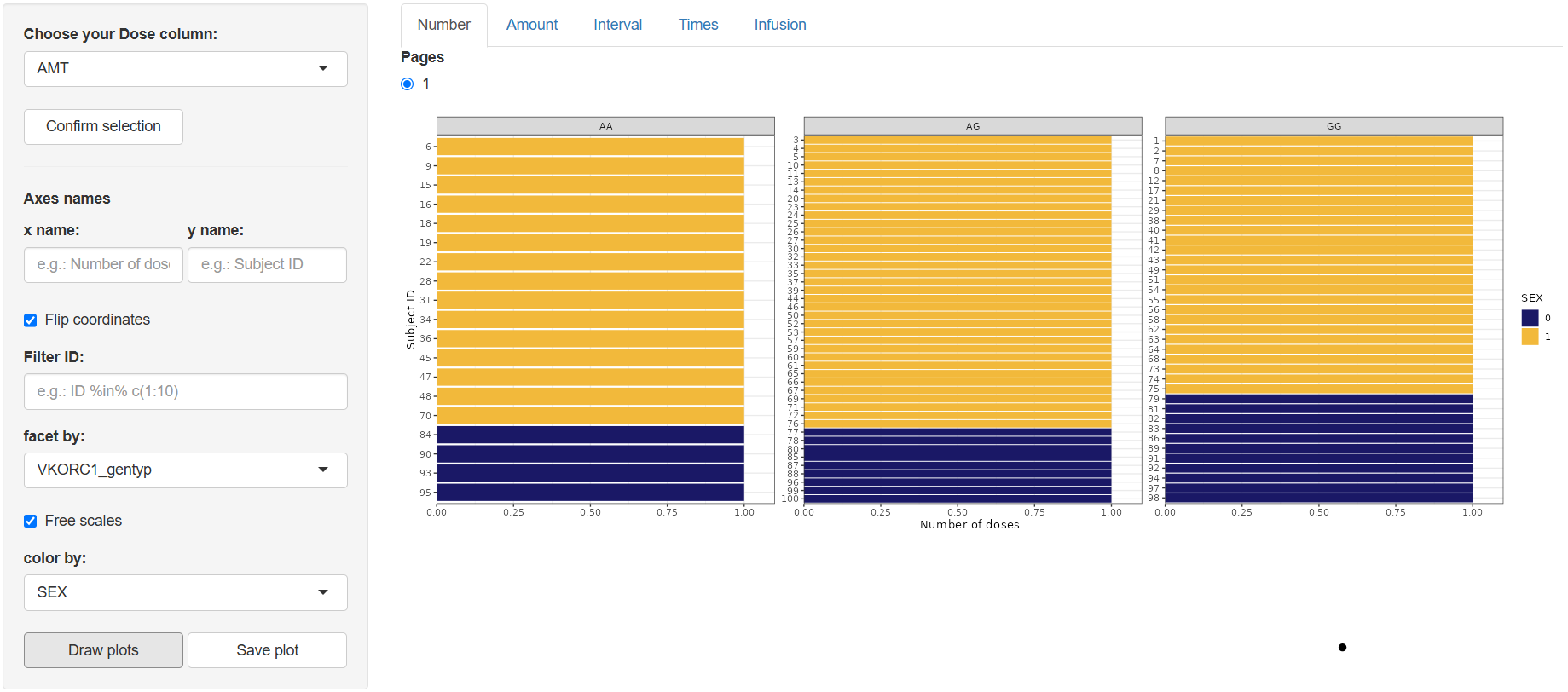

Dosing events

Dosing Events

The Dosing Events section offers an interactive workspace for visualising dose administration patterns across subjects. Whether you need a quick overview of how many doses each participant received, a detailed look at infusion times, or a check on intervals between administrations, this section provides an array of pre-configured plots that can be customised to your needs.

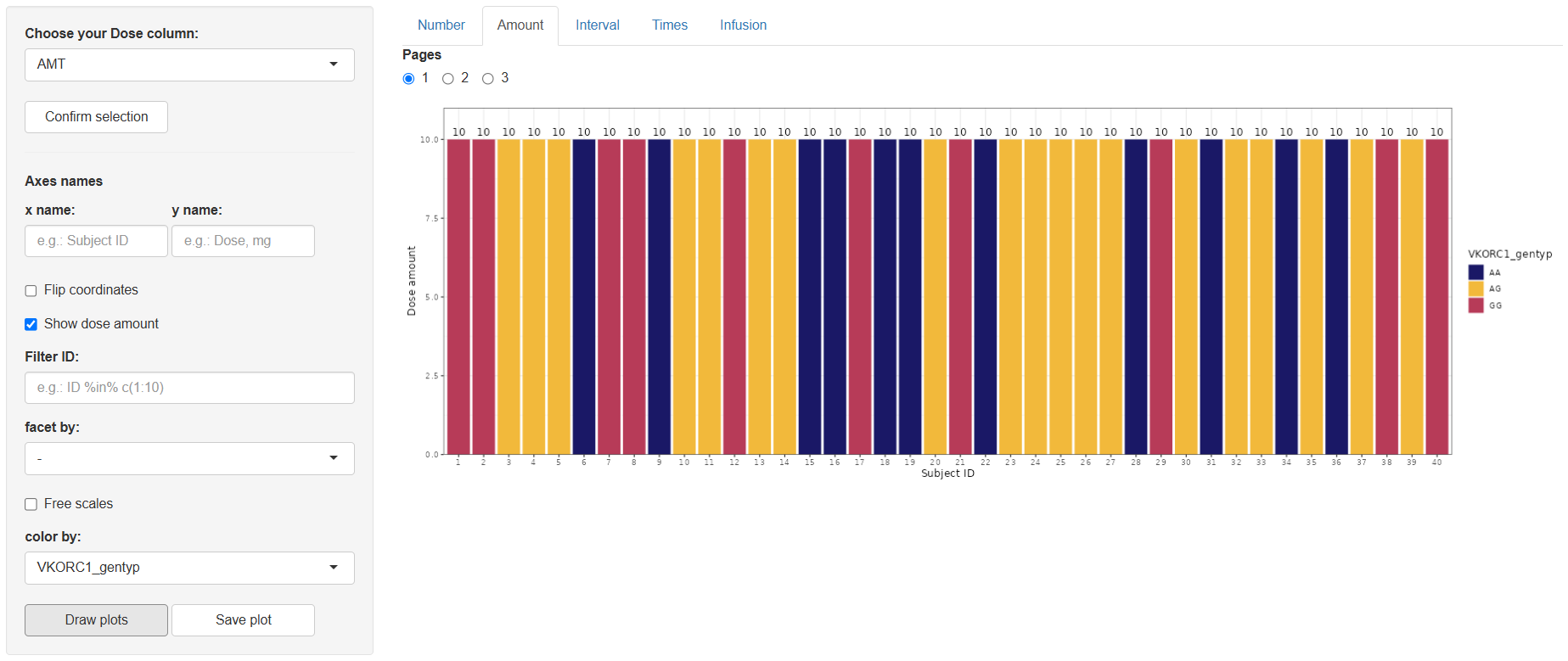

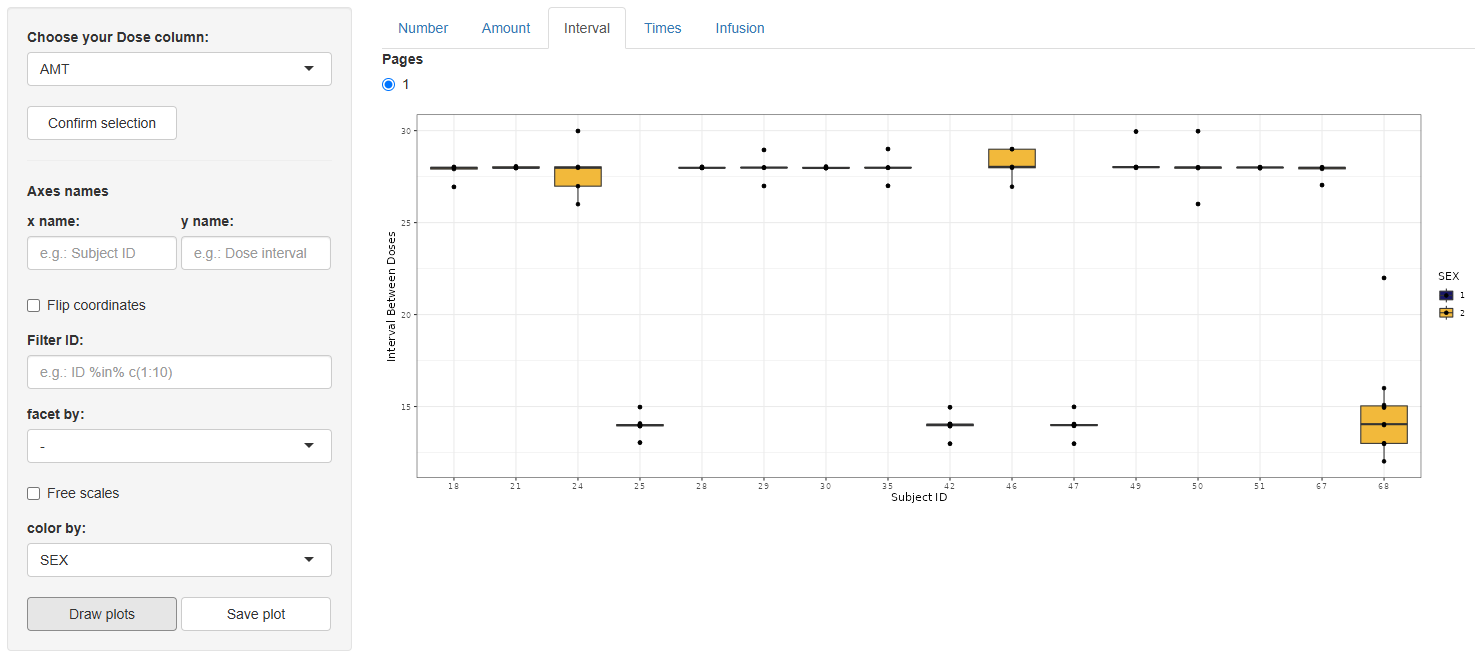

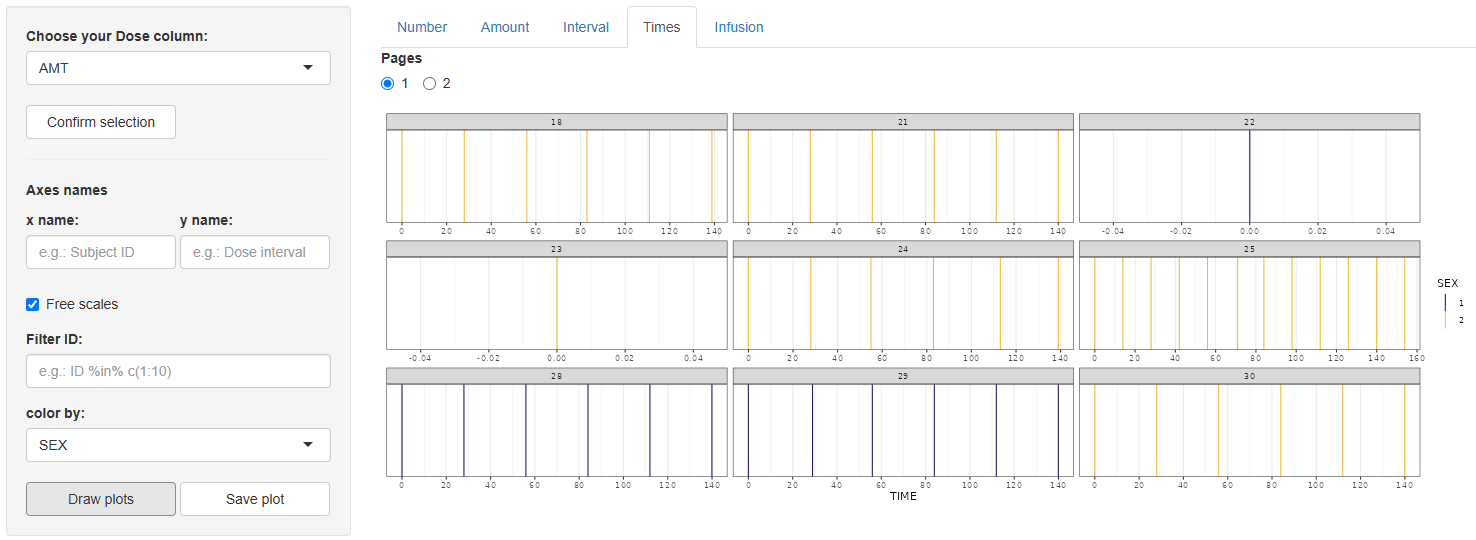

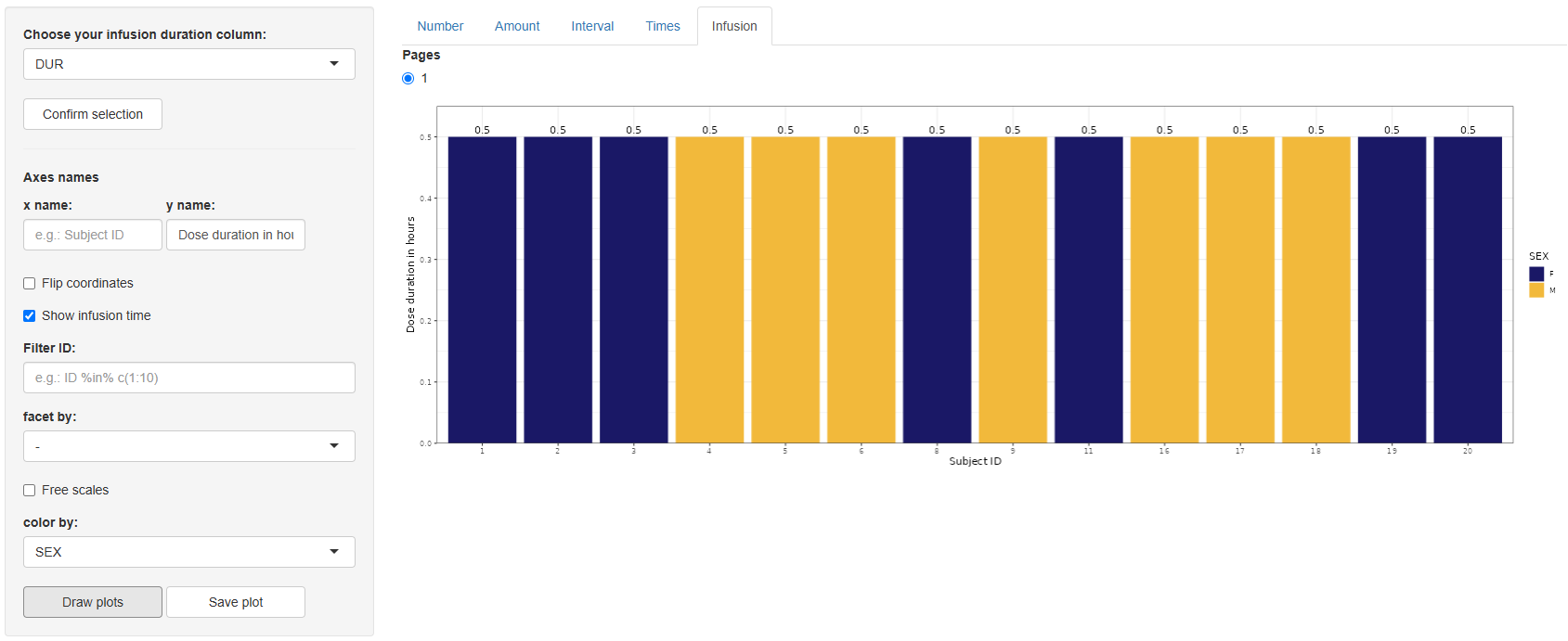

Five tabs are available, each devoted to a specific view of twelve events:

- Number – total doses per subject

- Amount – dose amounts per subject

- Interval – spacing between doses

- Times – actual dosing timestamps

- Infusion – infusion-duration profiles

1. Number (Number of doses)

Select the dose-amount column (default AMT) in “Choose your Dose column:” and click .

Configure the plot:

- Axis names (

x name,y name) Flip coordinates(optional)Filter:Apply conditional filters to display a data subset of subjectsfacet by:Create subplots based on any column in the datasetFree scales:Enable individual y-axis scaling for each facetcolor by:Assign line colors using any column (e.g., treatment group, gender)line type by:Assign line styles based on a selected column (max 5 levels recommended for clarity)

Click . A bar chart appears showing each Subject ID against the number of doses received.

Use to export the figure to the working directory set in Data section.

2 Amount (Dose amount)

Steps mirror the Number tab, with one extra toggle: Show dose amount.

The resulting plot displays dose amounts per subject (optionally overlaid as text if the toggle is on).

Save with .

3 Interval (Interval between doses)

Again select the dose column, confirm, and configure using the same options as the Number tab. This graph is only available for treatments with more than one dose per Subject ID.

On , a box-and-whisker plot appears, illustrating the distribution of inter-dose intervals for every subject.

Save via

.

4 Times (Time of doses)

After selecting and confirming the dose column, a streamlined set of options appears:

- Axis names,

Free scales,Filter ID,color by.

Press . The output is a faceted panel—one facet per Subject ID—containing vertical bars at every dosing time, making shifts in scheduling easy to spot.

Save with .

5 Infusion (Duration of dose)

Choose the infusion-duration column (default DUR) and click .

Configure the plot, identical controls to the Number tab, with one extra toggle: Show infusion time.

Click . A bar chart appears showing infusion durations per subject.

Save with .

The Dosing Events section delivers quick, visually rich insights into dosing schedules, quantities, and infusion characteristics—key information for understanding treatment exposure before modelling. After verifying dosing patterns here, you can proceed confidently to further exploratory analyses or pharmacometric modelling steps.

Tables

The Tables section is designed to facilitate the structured and semi-automated creation of statistical summary tables. It enables users to generate both Descriptive and Inferential statistical outputs using a highly configurable interface, tailored to the user’s specific dataset and analytical goals. Whether summarizing continuous variables, exploring distributions of categorical data, or comparing groups through hypothesis testing, this module provides a comprehensive yet flexible solution for table generation.

The section is divided into two main components, based on the type of statistics to be generated:

1. Descriptive Statistics

Work in this tab begins by specifying the type of data from which statistics will be extracted. This is done through the "Select data type:" window, where the user must choose between Continuous and Categorical. Once the data type is selected, the next window, "Select columns with continuous/categorical data:", allows the user to specify the variables of interest. Clicking the button will then display the configuration panel relevant to the chosen data type.

1.1 Continuous

When Continuous is selected, the configuration panel displays a set of statistical options grouped by type. Users may select one or multiple measures to include in the output table:

-

Measures of central tendency:

MeanMedian

-

Measures of variability around the mean:

Standard Error (SE)Standard Deviation (SD)95% Confidence Interval (95% CI)

-

Measures of variability around the median:

RangeMedian Absolute Deviation (MAD)Interquartile Range (IQR)5th–95th Percentile2.5th–97.5th Percentile

Once the desired configuration is set, click to generate the summary. The resulting table is displayed in the main panel and reflects the selected covariates and statistics.

An additional option in this tab is "Group By:" (default is none). If a grouping variable is selected from the dataset, two Orientation styles become available:

-

Horizontalorientation: Adds the grouping column as a new row-level variable. Each group appears as a separate row, alongside an additional "Overall" row summarizing the full dataset.Figure 3. Descriptive statistics tab displaying the table configuration panel and the resulting table with continuous covariates AGE, WEIGHT, BMI, CLCR grouped by SEX using horizontal orientation.

-

Verticalorientation: Restructures the table with the following columns:Variable(selected covariates)Statistic(selected measures)One column per levelin the group-by variable, with an additionalOverallcolumn

Figure 4. Descriptive statistics tab displaying the table configuration panel and the resulting table with continuous covariates AGE, WEIGHT, BMI, CLCR grouped by SEX using vertical orientation.

1.2 Categorical

If Categorical is selected as the data type, the configuration menu will offer the following options for descriptive summary, which can be selected simultaneously:

-

Number (N)– the absolute count of observations in each category -

Percent (%)– the proportion of observations in each category

Once the desired statistics are selected, click to generate the output. The resulting table will be displayed in the main panel and will include the following columns:

-

Variable– the name of the selected covariate -

Categories– the levels within each covariate -

Number– if selected -

Percent– if selected

An additional feature in this tab is the "Group By:" option (none by default). If a grouping column is selected, the output table will expand to compare the distribution of each categorical variable across the levels of the grouping factor.

In this grouped output, the table retains the Variable and Categories columns. Each additional column corresponds to one of the levels in the selected grouping variable, annotated with the number of subjects in that group. A final column, Overall, provides a summary across all groups.

Use to export the figure to the working directory set in Data section.

2. Inferential statistics

This tab provides the tools to perform correlation analyses between selected variables, offering a straightforward setup for generating inferential statistical tables.

Work in this tab begins by selecting the variables of interest from the available dataset columns. Two separate windows are provided:

- Select Y variable(s):

- Select X variable(s):

Once the variables have been chosen, click the button to display the configuration panel.

The configuration panel offers the following options for setting up the correlation analysis:

-

Correlation type:

PearsonSpearmanKendall

-

Additional outputs:

p-valueConfidence Interval

You may select one or more correlation types and include any combination of the additional outputs. Once the desired configuration is complete, click to generate the results.

If a correlation type other than Pearson is selected along with Confidence Interval, the following informational note will appear in the interface:

Note: Confidence intervals are only available for Pearson correlation.

Use to export the figure to the working directory set in Data section.

The Tables section provides a convenient and flexible way to generate descriptive and inferential statistical summaries. With customizable options and grouping features, it supports quick exploration and clear presentation of both continuous and categorical data—ideal for analysis, reporting, or quality control.

About NLME module

Background

Population PK/PD modeling and its variations are arguably one of the most used types of model-based analyses in MIDD. The development of such models follows a rigid workflow that includes such steps as structural model selection, statistical model selection, covariate search, and forward simulations. Mechanistic, or QSP models, utilize similar functionality, however, with a lot of nuances. For example, covariate search is not typically performed in QSP as relevant covariates ought to be included as a part of the structural model rather than a parameter. At the same time, the QSP approach demands additional set of tools, such as sensitivity analyses, likelihood profiling, or parameter estimation via virtual populations simulation.

Objectives

- Implementation and modification of structural, statistical and covariate models.

- Estimation of unknown parameters within the model using different algorithms and approaches.

- Extensive model diagnostics and evaluation.

- Automatic model development and assessment.

- Model-based simulations.

Sections of the module

- Data

- Model editor

- Model

- Initial estimates

- Task

- Results

- Goodness-of-fit plots

- Covariate search

- Simulations

Abbreviations

PK – Pharmacokinetics PD – Pharmacodynamics QSP – Quantitative Systems Pharmacology MIDD – Model-Informed Drug Development NLME – Nonlinear Mixed Effects

Data

One of the key functionalities of the NLME module is to estimate the parameter values of a model based on observed data, which is typically represented as time series measurements for each individual, study arm, animal, or other experimental setup. Additionally, the relevant data is often linked to drug administration and may include both time-varying and constant independent variables (covariates) that can be incorporated into the model.

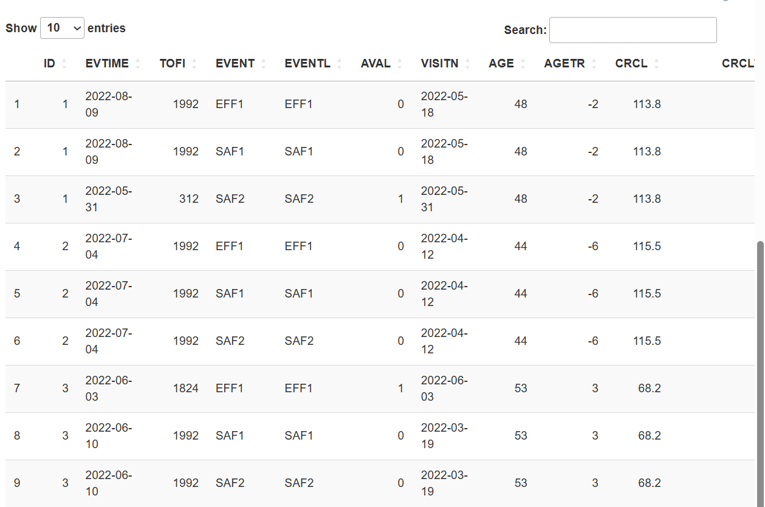

Communication between the data and the model is facilitated by compiling a dataset with a predefined structure, which can be categorized into three types of elements: time series, dosing events, and covariates.

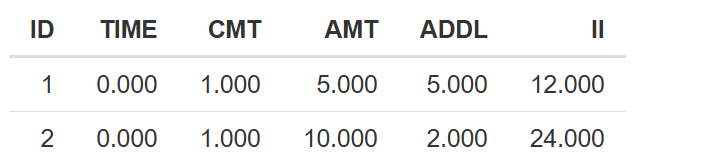

Standardized dataset structure

Standardized datasets in tabulated format accepted by Simurg software are inspired by CDISC guidelines [1] and are compatible with other conventional software, such as Monolix (Lixoft, France) and NONMEM (Icon, USA).

Each line of the dataset should correspond to one inidivudal and one time point. Single line can desribe a measurement, or a dosing event, or both.

Time series

Mandatory columns:

ID- unique identificator of an individual/animal/study arm/experimental setup, typically characterized by unique combination of observations, dosing events and covariates. Can be numeric or character.TIME- observation time. Numeric.DV- observed value of a dependent variable. Numeric.DVID- natural number corresponding to the identificator of a dependent variable.

The user can specify, via the interface, which columns in the dataset correspond to ID and TIME.

Optional columns:

DVNAME- character name of a dependent variable. Should have single value perDVID.MDV- missing dependent variable flag. Equals 0 by default. If equals 1 - observation in the corresponding line is ignored by the software.CENS- censoring flag, can be empty, 0, -1 (for right censoring) and 1 (for left censoring). Value inDVcolumn associated withCENSnot equal to 0 servesa as lower limit of quantification for left censoring or upper limit of quantification for right censoring (relevant for M3 censoring method).LIMIT- ifCENScolumn is present, numerical value inLIMITcolumn will define lower or upper limit of the censored observations (relevant for M4 censoring method).

Dosing events

EVID- identificator of a dosing event. By default equals 0 which corresponds to an observation without any associated events (AMT, etc. are ignored). Other possible values include:- 1 - dosing event.

- 2 - reset of the whole system to initial conditions, with or without dosing event.

- 3 - reset of the associated

DVIDto the value inDVcolumn, with or without dosing event.

CMT- dosing compartment - a natural number corresponding to the running number of a differential equation within a model.ADM- manually assigned administration ID. ReplacesCMTif present. Natural number.AMT- dosing amount. Numeric.II- time interval between the doses. Numeric.ADDL- number of additional doses. Natural number.TINForDUR- duration of infusion. Numeric.RATE- infusion rate. Numeric. ReplacesTINForDURif present.

Covariates

Any additional column in a dataset can be considered as continuous (if numeric) or categorical (if character) covariate, either constant (if covariate value does not change over time within a single ID), or time-varying. Interpolation for the latter is performed via last observation carried forward approach.

Initialization of the dataset

A dataset can be uploaded into the environment by pressing  button and selecting a file with the following extensions:

button and selecting a file with the following extensions: .csv, .txt, .tsv, .xls, .xlsx, .sas7bdat, .xpt.

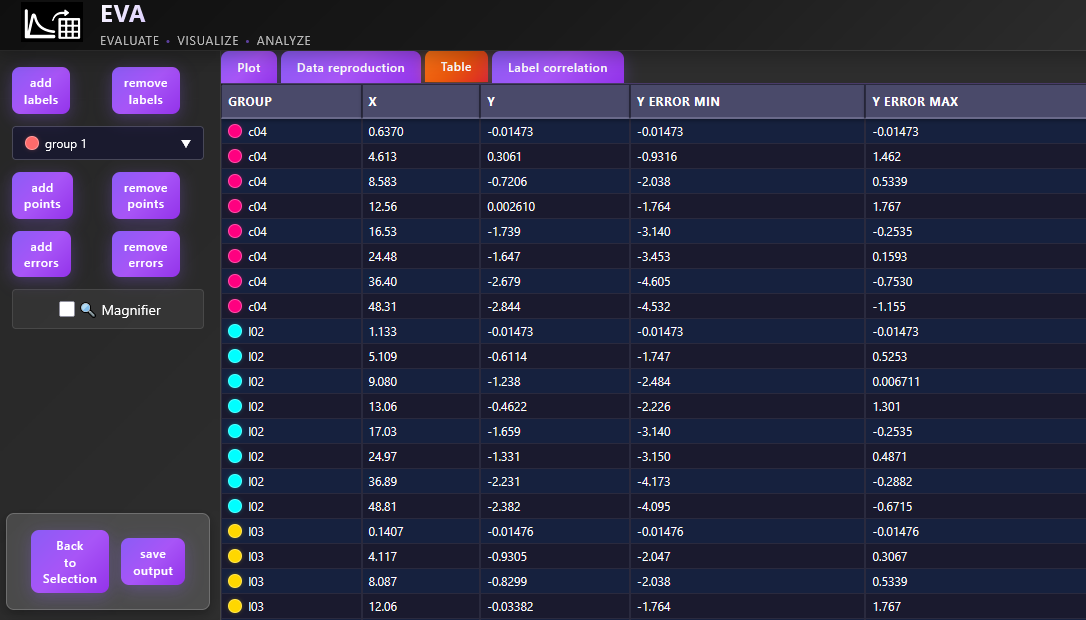

Once a dataset is uploaded, its content will appear in a form of a table on the main panel:

Modifications of the dataset are possible through the Simurg Data management module's Data tab.

Once uploaded, the dataset is recognized by the software and can be used for subsequent model development.

References

[1] https://www.cdisc.org/standards/foundational/adam/basic-data-structure-adam-poppk-implementation-guide-v1-0

Model editor

Model editor tab allows a user to write de novo or modify existing code of a structural model.

Simurg is capable of parsing of various syntaxes, including MLXTRAN and rxode2, in addition to having its own flexible modeling language.

Import of an existing model from an external .txt file can be done by pressing  button.

Created or updated model can be saved to a file using

button.

Created or updated model can be saved to a file using  button.

button.

Essential strucutral elements of Simurg syntax

The only two mandatory sections that need to be present in a structural model file when using Simurg modeling syntax are # [INPUT] and # [MODEL], as shown on the figure:

# [INPUT] contains the names and initial values of parameters to be estimated.

# [MODEL] contains the rest of the code, including fixed parameters, explicit functions, initial conditions, differential equations, etc.

Comments are introduced using # symbol. Thus, sections like ### Explicit functions or ### Initial conditions do not affect parsing and used for sorting the code.

End of the line should be marked with ;.

Syntax for the functional elements

Initial conditions

X(0) = X0, where X is a dependent variable, and X0 can be a number, a parameter, or an explicit function.

Differential equations

d/dt(X) = RHS, where X is a dependent variable, and RHS is the right hand side of a differential equation.

Bioavailability

f(X) = Fbio, where X is a dependent variable, and Fbio can be a number, a parameter, or an explicit function.

Lag time

Tlag(X) = Tlag, where X is a dependent variable, and Tlag can be a number, a parameter, or an explicit function.

Handling of covariates

If an object exists within model structure, but is not designated in # [INPUT], as explicit function, dependent variable or fixed parameter, it will be automatically treated as a covariate. Thus, model parsing at the Model tab will not fail as long as the modeling dataset contains a column with the name matching that of the object.

Example: 2-compartment PK model with first-order absorption

After defining the model, proceed to load it into the Model section.

Model

Import of a structural model from .txt file should be preformed after a modeling dataset was uploaded at the Data tab by pressing  button.

Description of the modeling syntax is provided in Model editor tab.

button.

Description of the modeling syntax is provided in Model editor tab.

Once a model file is uploaded, the content - structural model - will be shown on the main panel and additional fields will pop up to assign variables per DVID (Dependent Variable Identificator):

The number of fields will correspond to the number of unique DVIDs in the dataset. The label for the fields is formed as

DVID#[DVID number from the dataset] ([respective DVNAME from the dataset]).

A user should assign variables to the DVIDs by typing variable name into the respective fields.

Then, the model should be initialized by pressing  button.

button.

Initial estimates

The Initial estimates tab enables users to define and visually evaluate the starting values of fixed-effect model parameters. Providing well-informed initial estimates can significantly improve the speed and stability of the model fitting process.

📌 Note: Before using this tab, make sure both the Data and Model sections have been properly initialized.

Getting Started

To begin, navigate to the Initial estimates tab:

Click the button to import the initial parameter estimates directly from the model file. The values retrieved depend on the modeling syntax used:

-

For models written in Simurg syntax, parameter values explicitly set in the model definition will be retrieved.

-

RxODE syntax, which shares structural similarities with Simurg's syntax, is also supported. If RxODE-style models are used, initial values are read in the same way.

-

For mlxtran syntax, initial values default to 1 unless manually modified.

💡 Simurg is compatible with multiple modeling languages and can interpret both RxODE and mlxtran syntaxes in addition to its own native syntax.

Adjusting and Evaluating Initial Values

Parameter values for fixed effects can be edited using the panel on the right side of the interface. This panel is divided into three functional sections:

1. Parameter Input Panel

In the first section, you can edit the initial values of the fixed-effect parameters as specified in the model. These editable fields allow you to fine-tune the starting estimates before fitting begins.

2. Output Selection Panel

If your model includes multiple outputs (DVIDs), the second section of the panel enables you to choose which output to visualize. This is particularly helpful for models that simulate multiple endpoints or compartments.

3. Plot Configuration Panel

In the third section, you can customize the plot appearance. Options include:

-

Enabling or disabling log scale for the x-axis (time) and y-axis (output)

-

Adjusting the minimum and maximum limits for both axes manually

These controls help ensure the resulting plot is tailored to your data's scale and characteristics.

After adjusting the configuration in these sections, click the  Show plots button. This will display a set of time-profile plots comparing model predictions (based on your current initial estimates) against observed data for each individual in the dataset.

Show plots button. This will display a set of time-profile plots comparing model predictions (based on your current initial estimates) against observed data for each individual in the dataset.

This interactive evaluation step helps you visually assess whether your initial estimates are plausible before proceeding with model calibration.

Resetting and Proceeding

To revert changes and restore the original parameter values from the model file, simply click the button again.

Once you're satisfied with the initial estimates, proceed to the Task tab to configure statistical components. These initial values will be used as starting points for model fitting.

Task

The "Task" section provides tools to initialize and manage the statistical components used during the model calibration process.

Work in this tab begins by clicking the button. This sets the path to a folder where the configuration of statistical components and the results of model fitting will be stored.

You may select either a new (empty folder) directory or one that contains results from a previous fitting session. If the directory already contains results, you can skip earlier steps (e.g., Data, Model, or Initial estimates) and move directly to task section.

After selecting the working directory, four main options become available:

-

loads previously saved fitting results from the selected directory. Once loaded, you can proceed to tabs like Results, GoF plots, or Simulations to evaluate or utilize the fitted model.

-

loads a previously saved configuration of the statistical components. After loading, select the fitting algorithm (e.g., Simurg, Monolix, or nlmixr) and proceed to

.

-

cleans the directory if it contains files and opens a list of options for configuring the statistical components. This option requires that the Data, Model (or Model editor), and Initial estimates tabs have already been properly initialized.

-

deletes all contents from the selected directory, allowing you to start fresh with a new statistical component configuration.

Creating statistical model

The process of creating a statistical model in the "Task" tab is divided into four key components:

1. Residual error model

In pharmacometric modeling, the residual error model captures the unexplained differences between observed data and model predictions — those not accounted for by the structural model or inter-individual variability.

Simurg offers several residual error model options for each specified DVID, including:

-

Constant error (independent of the predicted value): $$ y = f + \epsilon, \epsilon ∼ N(0, a^2)$$

-

Proportional error (increases proportionally with the predicted value): $$ y = f \cdot (1 + \epsilon), \epsilon ∼ N(0, b^2)$$

-

Combined1 error (constant + proportional): $$ y = f + \epsilon, \epsilon ∼ N(0, a^2 + (b·f)^2)$$

Here, \(f \) is the predicted value, and \( a \) and \( b \) are estimated error parameters.

The fields for selecting the residual error model and its parameters look like this:

Additionally, you can specify how BLOQ (Below Limit of Quantification) data are handled. Available methods include:

- M3: BLOQ data points are treated as left-censored values.

- M4: A hybrid method where:

- BLOQ values before the first quantifiable observation are treated as censored

- BLOQ values after are treated as missing (ignored)

These options are only available if your dataset (initialized in the Data tab) contains the required columns:

- For M3:

CENS - For M4:

CENSandLIMIT

If these columns are not present, the default handling method is "none".

2. Parameter definition

This section allows you to define the characteristics of model parameters during the fitting process (Figure 1(a)). Specifically, you can determine:

-

Whether a parameter is fixed or includes random effects

-

The distribution type used to model the random effects

📌 Note: The distribution settings apply to random effects, not the fixed effect estimates themselves.

Available distributions in Simurg include:

| Distribution | Formula |

|---|---|

| Normal | \( P_i=\theta +\eta_i, \space\space \eta ∼ N(0,\omega^2)\) |

| Lognormal | \( P_i=\theta + \exp(\eta_i), \space\space \eta ∼ N(0,\omega^2)\) |

| Logit-normal | \( P_i=\frac{1}{1+\exp(-(\theta+\eta_i))}, \space\space \eta ∼ N(0,\omega^2)\) |

where \(\theta\) is the typical value of a parameter, \(\eta_i\) the random effect for individual \(i\), and \(\omega\) is the standard deviation of \(\eta\).

In addition, you can specify initial values for random effects and their correlations using the matrix provided on the right-hand side of the interface (Figure 1(b)). This matrix allows for the configuration of:

- Variances – Initial guesses for \(\omega^2\), representing the variability of each random effect.

- Correlations – Initial values for the correlations between random effects (typically set to 0 unless prior knowledge suggests otherwise).

Matrix structure:

- Diagonal elements represent the initial values for the standard deviations of the random effects (i.e., \(\omega^2\)).

- Off-diagonal elements define the initial correlations between the corresponding random effects.

These initial values can influence the convergence behavior of the fitting algorithm, so it's recommended to use reasonable estimates when available.

3. Covariate model

This section allows you to introduce covariate effects into the model, enabling more personalized and accurate parameter estimation based on individual-specific characteristics from the dataset.

The interface looks like this:

To add a covariate effect:

1. Select the parameter you want the covariate to influence.

2. Choose the covariate from the list (the name must match a column in the initialized dataset).

3. Specify the covariate type:

-

Categorical: Define the reference category, which serves as the baseline level for comparison.

-

Continuous: Choose both a function to describe the covariate relationship and a central tendency transformation (mean or median) to normalize the covariate.

Functions for Continuous Covariates

Simurg provides several functional forms to model continuous covariate relationships:

- Linear (lin): $$\theta_i = \theta_{ref} \cdot (1+\beta \cdot (x_i-x_{ref}))$$ A direct linear relationship between the covariate and the parameter.

- Log-linear (loglin): $$\theta_i = \theta_{ref} \cdot \exp(\beta \cdot (x_i-x_{ref}))$$ A multiplicative effect, useful when the effect increases or decreases exponentially.

- Power model: $$\theta_i = \theta_{ref} \cdot \left( \frac{x_i}{x_{ref}} \right) ^\beta $$ A flexible model that can capture nonlinear proportional effects, often used in allometric scaling.

Where \(\theta_i\) is the individualized parameter estimate, \(\theta_{ref}\) is the parameter value at the reference covariate value \(x_{ref}\), \(\beta\) is the estimated covariate effect, \(x_{i}\) is the individual's covariate value.

You can choose whether \(x_{ref}\) is based on the mean or median value of the covariate in the dataset.

4. Specify the initial value for the parameter associated with the reference category (for categorical covariates) or the normalized value (for continuous covariates).

5. Click "Set" to apply the covariate effect to the selected parameter.

Once all configurations are complete, click the button. This action saves the statistical model setup—defined in the previous sections to the selected working directory.

4. Optimization options

📌 Options in this section will become available only after the control object is created.

After the control object has been successfully created:

Select the fitting algorithm you wish to use (e.g., Simurg, Monolix, or nlmixr).

Click to begin the model fitting process.

When the fitting is complete, you can move on to the Results tab to analyze the output and evaluate the model's performance.

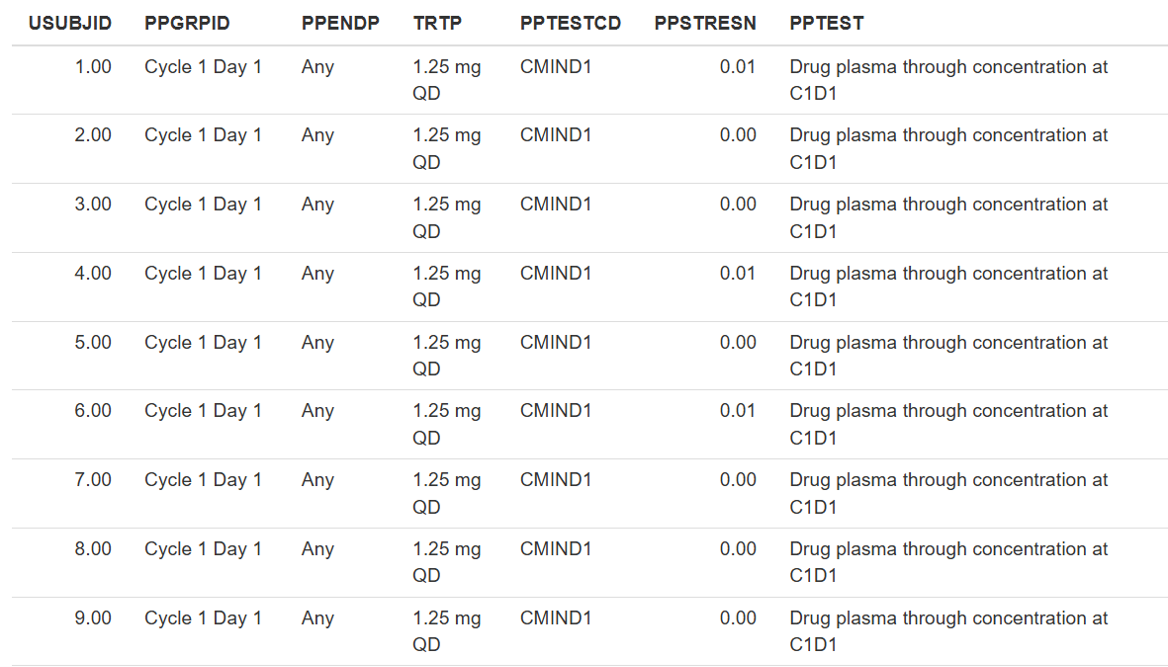

Results

Essential output of a model calibration procedure includes several numerical characteristics and scores, such as:

- point-estimates of population parameter values;

- standard deviation (SD) of random effects;

- eta-shrinkage;

- standard errors (SE) for all parameters;

- individual parameter values if random effects are present in the model;

- correlation between parameters;

- likelihood-based numerical criteria.

To extract this infromation from a modeling project, either a calibration procedure should be performed or the results of a calibration procedure should be loded following the instructions for the Task section. Once it is done, Results section in NLME can be accessed:

and relevant output can be generated by pressing button.

Generated output is spread across four tabs:

After "View model results" button is pressed, button will appear below it. By pressing this button all figures and tables from all four tabs will be saved to location of the current project within Simurg environment.

In addition, button, available on the first 3 tabs, allows to export figures or tables from a tab to local computer.

1. Summary

Summary tab contains essential information in a form of a summary table on model parameters obtained after a calibration procedure:

Parameter names are shown exactly as specified in the structural model.

Covariate coefficients are named using the following principle:

[parameter name]_[covariate name]_[transformation flag]

Residual error model parameters are assigned as follows:

[variable name]_[a - for additive component; b - for proportional component]

\(SE\) of the parameters are calculated in three steps.

First, variance-covariance matrix is calculated for transformed normally distributed parameters from the Fisher Information Matrix (FIM) as follows:

$$ C(\theta)=I(\theta)^{-1} $$

Next, \( C(\theta) \) is forward-transformed to \( C^{tr}(\theta) \) using the formulas to compute the variance, dependent on the distribution of the parameters:

- For normally distributed parameter: no transformation applied.

- For log-normally distributed parameters: $$ SE(\theta_k)=\sqrt{( \exp(\sigma^2)−1) \cdot \exp(2\mu + \sigma^2)} \\ \mu = \ln(\theta_k) \\ \sigma^2 = \operatorname{var} (\ln (\theta_k)) $$

- For logit-normally distributed parameters: a Monte Carlo sampling approach is used. \(100000\) samples are drawn from the covariance matrix in gaussian domain. Then the samples are transformed from gaussian to non-gaussian domain. Then the empirical variance \( \sigma^2 \) over all transformed samples \( \theta_k \) is calculated.

Finally, \(SE\) of the estimated parameter values is calculated from the diagonal elements of the forward-transformed variance-covariance matrix: $$ SE(\theta_k) = \sqrt{C^{tr}_{kk}(\theta_k)} $$

Relative standard error (\(RSE\)) is calculated as \( \frac{SE}{Estimate} \cdot 100 \% \).

Cases with \(RSE > 50 \% \) are highlighted in red, as \(RSE > 50 \% ( \frac{1}{1.96} * 100 \% ) \) corresponds to the situation where \( 95 \% \) confidence interval of \( N(0, 1) \) includes zero, making respective parameter not statistically different from zero with \( \operatorname{p-value} = 0.05 \).

Random effects column contains \(SD\) of the estimated random effects \( (\omega) \).

\( \eta \)-shrinkage is calculated based on the following equation: $$ \eta \space shrinkage = 1 - \frac{SD(\eta_i)}{\omega} $$ \( \eta \)-shrinkage exceeding \( 30 \% \) is indicative of unreliable individual parameter estimates and warrants the revision of a statistical model [1].

2. Individual parameters

This tab contains a single table with individual parameter values defined as the mean of conditional distribution for parameters with random effects and as typical parameter values for the parameters without random effects.

3. Correlations

Correlation matrix is derived from the variance-covariance matrix as:

$$ \operatorname{corr}(\theta_i, \theta_j) = \frac{C^{tr}_{ij}}{(SE(\theta_i)*SE(\theta_j))} $$

and is represented visually in a form of a heatmap, where the value and color in each cell represents Pearson's correlation coefficient (blue - for negative values, red - for positive values).

4. Likelihood

This tab contains likelihood-based numerical scores used to benchmark models:

- \( -2 \cdot \log(\operatorname{Likelihood}): n \log(2\pi)+\sum(\log(\sigma_j^2 ) + \frac{(Y_j-Y^*_j (t,\Theta))^2}{\sigma_j^2}) \)

- Akaike information criterion: \( AIC = -2LL + 2 \cdot P \)

- Bayes information criterion: \( BIC = -2LL + P \cdot \log(N) \)

where \( P \) is the number of estimated parameters within the model; \(N \) is the number of data points.

N.B.: likelihood cannot be computed in a closed form if random effects are present in the model.

Model comparison

"Likelihood" tab allows to perform semi-automatic model comparison across multiple projects, located within the same folder of the currently active project by pressing , selecting the subset of projects to include into the analysis (optional), and pressing

button.

For example, running model comparison given the following folder structure:

parent-folderWarfarin_PKPD_1Warfarin_PKPD_2- current projectWarfarin_PKPD_3Warfarin_PKPD_4Warfarin_PKPD_5Warfarin_PKPD_6

where Warfarin_PKPD_1 ... Warfarin_PKPD_6 are successfully converged computational projects, will provide user with the following table:

By indicating character string in the field, for example,

project1, will leave only those projects in the table that contain this string within their names.

Goodness-of-fit (GoF plots)

The GoF plots tab provides a suite of graphical tools to assess how well the model fits the observed data. These diagnostic plots help visually evaluate model performance, detect systematic bias, identify outliers, and uncover potential model misspecification.

To use this section, the model must first be fitted or previously generated results must be loaded, following the steps outlined in the Task section. Once this is done, the GoF plots section in NLME becomes accessible:

Getting Started

To begin, click the button. This action loads the model results stored in the Task section and activates the available plot menus. From there, you can create diagnostic plots based on your chosen configuration.

Once you’ve configured the desired settings, click the

button to generate the plot.

The resulting plot can be downloaded by clicking the

button for further analysis or reporting.

Available Plot Types

This section offers eight types of diagnostic plots, organized into the following tabs:

- Time Profiles

- Observed vs. Predicted

- Residuals

- Distribution of Random Effects (RE)

- Correlation between RE

- Individual parameters vs. covariates

- VPC (Visual Predictive Check)

- Prediction distribution

1. Time Profiles

The Time Profiles tab provides tools for visually evaluating how well the model fits the observed data over time, both at the population level and the individual level, for the selected output type.

The available output types are determined by the DVIDs (Dependent Variable Identifiers) specified in the Model section.

Plot Configuration Options

You can customize the plot using the following options:

-

Fit type to display: Choose whether to show the population predictions, individual predictions, or both on the plot

-

Axis settings:

- Manually adjust the x- and y-axis limits

- Enable or disable logarithmic scaling for either axis

2. Observed vs. Predicted

The Observed vs. Predicted tab allows you to assess how well the model predicts the observed data by comparing predicted values against actual observations. This comparison can be made at both the individual and population levels.

The available outputs correspond to the DVIDs selected in the Model section.

Plot Configuration Options

You can customize the plot using the following settings:

- Prediction Type: Choose to display Individual predictions, Population predictions, or both

- Log Axes: Enable logarithmic scaling on the x- and/or y-axes for better visualization of wide value ranges

- Spline Overlay: Optionally add a spline to the plot to highlight trends or deviations from the ideal fit line

3. Residuals

The Residuals tab provides diagnostic plots to evaluate the distribution and behavior of residuals, helping to detect model misspecification, bias, or heteroscedasticity.

The outputs available for plotting correspond to the DVIDs selected in the Model section. You can choose to visualize individual or population residuals.

Plot Types

This tab includes two types of plots:

3.1 Scatter Plot

This plot displays residuals versus time or predicted values to detect patterns or trends that may indicate issues with model fit.

Configuration options:

- Log scale for time axis – Apply logarithmic transformation to the time axis.

- Log scale for predicted values axis – Enable log scale for the x-axis when plotting residuals vs. predicted values

- Spline – Overlay a spline curve to visualize trends or systematic bias

- Axis limits – Manually define y-axis limits for better control over the plot view

3.2 Histogram

This plot shows the distribution of residuals to assess normality and variability.

Configuration options:

- Density curve – Overlay a smoothed density curve on the histogram

- Theoretical distribution – Compare the residuals to a theoretical normal distribution

- Information – Include a p-value from a statistical test (e.g., Shapiro-Wilk) to assess the normality of residuals

4. Distribution of Random Effects (RE)

The Distribution of Random Effects (RE) tab allows you to explore the variability captured by the model’s random effects and individual parameter estimates. This helps assess the assumption of normality and the behavior of random components in the model.

Begin by selecting the type of output you want to visualize:

4.1 Individual Parameters – Estimated parameter values for each individual.

4.2 Random Effects – Deviations from the population parameters (i.e., the modeled random components).

4.1 Individual Parameters

For Individual Parameters, only histograms are available.

Plot options:

- Select parameter names – This dropdown automatically lists all parameters associated with random effects. You can select all, or a subset, to include in the plot

- Density Curve – Overlay a smooth density curve on the histogram

- Information – Show the p-value from a normality test to assess the distribution

4.2 Random Effects

For Random Effects, you can choose between two plot types:

4.2.1 Histogram Visualizes the distribution of random effects for each selected parameter.

Options include:

- Select parameter names – A list of available omega terms (random effects) is automatically populated

- Density Curve – Add a smooth density overlay

- Theoretical distribution – Compare the empirical distribution with a standard normal distribution

- Information – Include p-value results of a normality test (e.g., Shapiro-Wilk)

4.2.2 Boxplot Displays the spread and central tendency of selected random effects using boxplots.

5. Correlation Between RE

The Correlation Between RE tab allows you to explore pairwise relationships between individual parameter estimates or random effects, helping to identify potential correlations or dependencies that might inform model refinement or covariate modeling.

Start by selecting the type of correlation plot you want to generate:

5.1 Individual Parameters – Scatter plots showing relationships between estimated parameters for each individual.

5.2 Random Effects – Scatter plots of the omega terms (random deviations from the population parameters).

5.1 Individual Parameters

Configuration options:

- Select parameter names – A list of model parameters associated with random effects is automatically populated. Select two or more to include in the plot

- Linear regression – Optionally overlay a regression line to visualize the trend

- Information – Display the Pearson correlation coefficient (r) to quantify the strength and direction of the relationship

5.2 Random Effects

Configuration options are the same as for Individual Parameters:

- Select parameter names – The dropdown provides a list of omega terms for parameters with random effects. Choose the ones you'd like to analyze

- Linear regression – Add a regression line to the scatter plot

- Information – Show the Pearson r value to assess correlation strength

6. Individual parameters vs. covariates

The Individual Parameters vs. Covariates tab enables exploration of potential relationships between individual parameter estimates or random effects and covariates in the dataset. This analysis is useful for identifying covariate effects that could be included in future model refinements.

Start by choosing the type of output to visualize:

Individual Parameters – Displays estimated parameter values per individual against selected covariates.

Random Effects – Shows the corresponding omega values plotted against covariates.

6.1 Individual Parameters

Configuration options:

- Select parameter names – Choose one or more individual parameters associated with random effects from the automatically populated list.

- Select covariates names – Choose the covariate (column from your dataset) to plot against the selected parameters.

- Linear regression – Optionally overlay a linear regression line to visualize potential trends.

- Information – Display the Pearson correlation coefficient (r) to quantify the relationship with continuous covariates or p-value in case of categorical covariates.

6.2 Random Effects

Configuration is identical to the Individual Parameters option, with one difference:

Select Parameter Names – This dropdown lists omega terms corresponding to the random effects.

You can still:

- Select a covariate,

- Add a linear regression line,

- And show the Pearson correlation coefficient or p-value.

7. VPC (Visual Predictive Check)

The Visual Predictive Check (VPC) tab provides powerful graphical diagnostics to evaluate how well the model predicts the distribution of observed data. It helps detect model misspecifications, assess variability, and ensure predictive performance across different covariate groups.

Getting Started

First, click the button to load the prediction results from the fitted model.

Configuration Options

- Select Output Choose the output variable you wish to analyze. Available options depend on the DVIDs defined in the Model section.

Stratification Options

- Stratification Column

Select a dataset column for stratification:

- If a categorical column is chosen, separate facets will be created for each category level.

- If a continuous column is selected, you can specify:

- The number of facets (choose between 2 and 4)

- The ranges to define each facet

Binning Options

-

Binning Method – Choose how the data will be binned along the x-axis:

- kmeans – Data-driven binning that clusters points based on similarity

- ntile – Splits the data into equal-sized groups based on percentiles

- equal_x – Divides the x-axis into equally spaced intervals

-

Number of Bins – Select how many bins to display in the VPC plot

Prediction Options

-

Observed Percentiles – Choose which percentiles of observed data to display:

- 10%, 50%, 90%

- 5%, 50%, 95%

-

Confidence Interval (CI) – Select the confidence interval for the prediction bands:

- 50%, 90%, 95%, or 99%

-

Prediction Correction – Enable this option if needed to correct for time-dependent variability in predictions

Display Options

Customize which visual elements to include in the plot:

- Add Legend – Adds a description for all visual elements in the plot

- Add Observed Data – Overlay observed data points

- Theoretical Percentiles Median – Display the model's predicted median

- Theoretical Percentiles CI – Display the model's confidence intervals around the theoretical percentiles

- Empirical Percentiles – Show observed percentiles based on the data

- Interpolation – Smooths the lines connecting the points across bins

Plot Options

- Axis Labels – Customize the names of the x-axis and y-axis

- Log Scale for Y-axis – Optionally apply a logarithmic scale to the Y-axis for better visualization of wide-ranging values

8. Prediction distribution

The Prediction distribution tab allows you to visualize the distribution of model predictions and assess how well they reflect the observed data across the selected output. This helps evaluate the spread and central tendency of predictions, and can be especially useful when exploring variability or stratification.

Getting Started

To begin, click the button. This step loads the prediction results from the fitted model into the tab.

Configuration Options

-

Select Output – Choose the output variable you wish to analyze. The available options are determined by the DVIDs selected in the Model section

-

Stratification by Dose – If the dataset contains a

DOSEcolumn, you can enable this option to generate separate prediction distributions for each dose group

Display Options:

-

Prediction Interval – Select the confidence interval to display around the predictions. Available options include: 50%, 80%, 90%, 95%

-

Legend – Include a legend for clarity when comparing multiple groups or overlays

-

Data – Overlay the observed data on top of the prediction distribution

-

Axis Labels – You can customize the x-axis and y-axis names to better describe your data and outputs

After completing the graphical evaluation in the GoF plots section, if the diagnostic plots indicate a satisfactory model fit—without major bias, trends, or significant outliers—you can confidently proceed to the next steps. These include exploring covariate effects in the Covariate Search section or using the model for predictive purposes in the Simulations section.

Covariate search

The Covariate search section allows users to systematically test potential covariates for inclusion in their model, helping reduce unexplained variability and improving the model's interpretability and predictive performance.

📌 Note: Before working in this section, ensure that the project has already been fitted or that results from a previous fit have been loaded in the Task section.

Once this is done, the Covariate search section in NLME becomes available to be used:

Begin by selecting the parameters to which covariates will be applied. This is done in the Select parameters dropdown. Once selected, click the

1. Covariate specification

After clicking

-

Covariate: Choose the column from your dataset to test as a covariate.

-

Covariate type: Specify whether the covariate is Continuous or Categorical.

If Continuous

-

Select the Function to define how the covariate influences the parameter:

- Linear (lin): $$\theta_i = \theta_{ref} \cdot (1+\beta \cdot (x_i-x_{ref}))$$ A direct linear relationship between the covariate and the parameter.